pandas.DataFrame 로드

pandas 데이터 프레임을 tf.data.Dataset에 로드하는 방법의 예제이다.

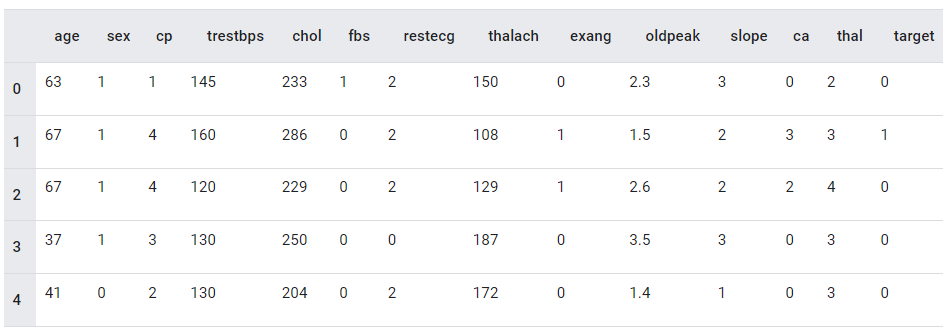

여기에서는 Cleveland Clinic Foundation for Heart Disease에서 제공하는 작은 데이터세트를 사용한다. CSV에는 수백 개의 행이 있다. 각 행은 환자를 설명하고 각 열은 속성을 설명한다. 이 정보를 사용하여 이 데이터세트에서 환자가 심장 질환이 있는지 여부를 예측하는 이진 분류 작업이다.

pandas를 사용하여 데이터 읽기

import pandas as pd

import tensorflow as tf

심장 데이터세트가 포함된 csv 파일을 다운로드한다.

csv_file = tf.keras.utils.get_file('heart.csv', 'https://storage.googleapis.com/applied-dl/heart.csv')

pandas를 사용하여 csv 파일을 읽습니다.

df = pd.read_csv(csv_file)

df.head()

df.dtypes

age int64

sex int64

cp int64

trestbps int64

chol int64

fbs int64

restecg int64

thalach int64

exang int64

oldpeak float64

slope int64

ca int64

thal object

target int64

dtype: object

데이터 프레임의 object thal 열을 이산 숫자 값으로 변환한다.

df['thal'] = pd.Categorical(df['thal'])

df['thal'] = df.thal.cat.codes

df.head()

tf.data.Dataset를 사용하여 데이터 로드하기

tf.data.Dataset.from_tensor_slices를 사용하여 pandas 데이터 프레임에서 값을 읽는다.

tf.data.Dataset를 사용할 때의 이점 중 하나는 간단하고 효율적인 데이터 파이프라인을 작성할 수 있다는 것이다. 자세한 내용은 데이터 로드 가이드를 참조하면 된다.

target = df.pop('target')

dataset = tf.data.Dataset.from_tensor_slices((df.values, target.values))

for feat, targ in dataset.take(5):

print ('Features: {}, Target: {}'.format(feat, targ))

Features: [ 63. 1. 1. 145. 233. 1. 2. 150. 0. 2.3 3. 0.

2. ], Target: 0

Features: [ 67. 1. 4. 160. 286. 0. 2. 108. 1. 1.5 2. 3.

3. ], Target: 1

Features: [ 67. 1. 4. 120. 229. 0. 2. 129. 1. 2.6 2. 2.

4. ], Target: 0

Features: [ 37. 1. 3. 130. 250. 0. 0. 187. 0. 3.5 3. 0.

3. ], Target: 0

Features: [ 41. 0. 2. 130. 204. 0. 2. 172. 0. 1.4 1. 0.

3. ], Target: 0

pd.Series는 __array__ 프로토콜을 구현하므로 np.array 또는 tf.Tensor를 사용하는 거의 모든 곳에서 투명하게 사용할 수 있다.

tf.constant(df['thal'])

<tf.Tensor: shape=(303,), dtype=int8, numpy=

array([2, 3, 4, 3, 3, 3, 3, 3, 4, 4, 2, 3, 2, 4, 4, 3, 4, 3, 3, 3, 3, 3,

3, 4, 4, 3, 3, 3, 3, 4, 3, 4, 3, 4, 3, 3, 4, 2, 4, 3, 4, 3, 4, 4,

2, 3, 3, 4, 3, 3, 4, 3, 3, 3, 4, 3, 3, 3, 3, 3, 3, 4, 4, 3, 3, 4,

4, 2, 3, 3, 4, 3, 4, 3, 3, 4, 4, 3, 3, 4, 4, 3, 3, 3, 3, 4, 4, 4,

3, 3, 4, 3, 4, 4, 3, 4, 3, 3, 3, 4, 3, 4, 4, 3, 3, 4, 4, 4, 4, 4,

3, 3, 3, 3, 4, 3, 4, 3, 4, 4, 3, 3, 2, 4, 4, 2, 3, 3, 4, 4, 3, 4,

3, 3, 4, 2, 4, 4, 3, 4, 3, 3, 3, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4,

4, 3, 3, 3, 4, 3, 4, 3, 4, 3, 3, 3, 3, 3, 3, 3, 4, 3, 3, 3, 3, 3,

3, 3, 3, 3, 3, 3, 3, 4, 4, 3, 3, 3, 3, 3, 3, 3, 3, 4, 3, 4, 3, 2,

4, 4, 3, 3, 3, 3, 3, 3, 4, 3, 3, 3, 3, 3, 2, 2, 4, 3, 4, 2, 4, 3,

3, 4, 3, 3, 3, 3, 4, 3, 4, 3, 4, 2, 2, 4, 3, 4, 3, 2, 4, 3, 3, 2,

4, 4, 4, 4, 3, 0, 3, 3, 3, 3, 1, 4, 3, 3, 3, 4, 3, 4, 3, 3, 3, 4,

3, 3, 4, 4, 4, 4, 3, 3, 4, 3, 4, 3, 4, 4, 3, 4, 4, 3, 4, 4, 3, 3,

3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 3, 2, 4, 4, 4, 4], dtype=int8)>

데이터세트를 섞고 배치 처리한다.

train_dataset = dataset.shuffle(len(df)).batch(1)

모델 생성 및 훈련

def get_compiled_model():

model = tf.keras.Sequential([

tf.keras.layers.Dense(10, activation='relu'),

tf.keras.layers.Dense(10, activation='relu'),

tf.keras.layers.Dense(1)

])

model.compile(optimizer='adam',

loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

metrics=['accuracy'])

return model

model = get_compiled_model()

model.fit(train_dataset, epochs=15)

Epoch 1/15

WARNING:tensorflow:Layer dense is casting an input tensor from dtype float64 to the layer's dtype of float32, which is new behavior in TensorFlow 2. The layer has dtype float32 because its dtype defaults to floatx.

If you intended to run this layer in float32, you can safely ignore this warning. If in doubt, this warning is likely only an issue if you are porting a TensorFlow 1.X model to TensorFlow 2.

To change all layers to have dtype float64 by default, call `tf.keras.backend.set_floatx('float64')`. To change just this layer, pass dtype='float64' to the layer constructor. If you are the author of this layer, you can disable autocasting by passing autocast=False to the base Layer constructor.

303/303 [==============================] - 1s 2ms/step - loss: 1.5284 - accuracy: 0.6502

Epoch 2/15

303/303 [==============================] - 1s 2ms/step - loss: 0.6147 - accuracy: 0.7492

Epoch 3/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5727 - accuracy: 0.7195

Epoch 4/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5679 - accuracy: 0.7294

Epoch 5/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5893 - accuracy: 0.7393

Epoch 6/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5484 - accuracy: 0.7327

Epoch 7/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5191 - accuracy: 0.7492

Epoch 8/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5487 - accuracy: 0.7360

Epoch 9/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5523 - accuracy: 0.7624

Epoch 10/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5234 - accuracy: 0.7459

Epoch 11/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5043 - accuracy: 0.7723

Epoch 12/15

303/303 [==============================] - 1s 2ms/step - loss: 0.5106 - accuracy: 0.7492

Epoch 13/15

303/303 [==============================] - 1s 2ms/step - loss: 0.4850 - accuracy: 0.7657

Epoch 14/15

303/303 [==============================] - 1s 2ms/step - loss: 0.4885 - accuracy: 0.7624

Epoch 15/15

303/303 [==============================] - 1s 2ms/step - loss: 0.4640 - accuracy: 0.7954

<tensorflow.python.keras.callbacks.History at 0x7fe8cc0fe518>

특성 열의 대안

사전을 모델에 대한 입력으로 전달하는 것은 tf.keras.layers.Input 레이어의 일치하는 사전을 작성하는 것만큼 간편하며, 함수형 API를 사용하여 필요한 사전 처리를 적용하고 레이어를 쌓는다. 특성 열의 대안으로 사용할 수 있다.

inputs = {key: tf.keras.layers.Input(shape=(), name=key) for key in df.keys()}

x = tf.stack(list(inputs.values()), axis=-1)

x = tf.keras.layers.Dense(10, activation='relu')(x)

output = tf.keras.layers.Dense(1)(x)

model_func = tf.keras.Model(inputs=inputs, outputs=output)

model_func.compile(optimizer='adam',

loss=tf.keras.losses.BinaryCrossentropy(from_logits=True),

metrics=['accuracy'])

tf.data와 함께 사용했을 때 pd.DataFrame의 열 구조를 유지하는 가장 쉬운 방법은 pd.DataFrame을 dict로 변환하고 해당 사전을 조각화하는 것이다.

dict_slices = tf.data.Dataset.from_tensor_slices((df.to_dict('list'), target.values)).batch(16)

for dict_slice in dict_slices.take(1):

print (dict_slice)

({'age': <tf.Tensor: shape=(16,), dtype=int32, numpy=

array([63, 67, 67, 37, 41, 56, 62, 57, 63, 53, 57, 56, 56, 44, 52, 57],

dtype=int32)>, 'sex': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([1, 1, 1, 1, 0, 1, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1], dtype=int32)>, 'cp': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([1, 4, 4, 3, 2, 2, 4, 4, 4, 4, 4, 2, 3, 2, 3, 3], dtype=int32)>, 'trestbps': <tf.Tensor: shape=(16,), dtype=int32, numpy=

array([145, 160, 120, 130, 130, 120, 140, 120, 130, 140, 140, 140, 130,

120, 172, 150], dtype=int32)>, 'chol': <tf.Tensor: shape=(16,), dtype=int32, numpy=

array([233, 286, 229, 250, 204, 236, 268, 354, 254, 203, 192, 294, 256,

263, 199, 168], dtype=int32)>, 'fbs': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1, 0], dtype=int32)>, 'restecg': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([2, 2, 2, 0, 2, 0, 2, 0, 2, 2, 0, 2, 2, 0, 0, 0], dtype=int32)>, 'thalach': <tf.Tensor: shape=(16,), dtype=int32, numpy=

array([150, 108, 129, 187, 172, 178, 160, 163, 147, 155, 148, 153, 142,

173, 162, 174], dtype=int32)>, 'exang': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([0, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 0, 0], dtype=int32)>, 'oldpeak': <tf.Tensor: shape=(16,), dtype=float32, numpy=

array([2.3, 1.5, 2.6, 3.5, 1.4, 0.8, 3.6, 0.6, 1.4, 3.1, 0.4, 1.3, 0.6,

0. , 0.5, 1.6], dtype=float32)>, 'slope': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([3, 2, 2, 3, 1, 1, 3, 1, 2, 3, 2, 2, 2, 1, 1, 1], dtype=int32)>, 'ca': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([0, 3, 2, 0, 0, 0, 2, 0, 1, 0, 0, 0, 1, 0, 0, 0], dtype=int32)>, 'thal': <tf.Tensor: shape=(16,), dtype=int32, numpy=array([2, 3, 4, 3, 3, 3, 3, 3, 4, 4, 2, 3, 2, 4, 4, 3], dtype=int32)>}, <tf.Tensor: shape=(16,), dtype=int64, numpy=array([0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0, 0])>)

model_func.fit(dict_slices, epochs=15)

Epoch 1/15

19/19 [==============================] - 0s 3ms/step - loss: 12.8710 - accuracy: 0.7261

Epoch 2/15

19/19 [==============================] - 0s 3ms/step - loss: 7.1240 - accuracy: 0.7261

Epoch 3/15

19/19 [==============================] - 0s 3ms/step - loss: 3.2908 - accuracy: 0.5611

Epoch 4/15

19/19 [==============================] - 0s 3ms/step - loss: 3.3019 - accuracy: 0.4917

Epoch 5/15

19/19 [==============================] - 0s 3ms/step - loss: 2.8981 - accuracy: 0.5446

Epoch 6/15

19/19 [==============================] - 0s 3ms/step - loss: 2.8607 - accuracy: 0.5050

Epoch 7/15

19/19 [==============================] - 0s 3ms/step - loss: 2.6697 - accuracy: 0.5248

Epoch 8/15

19/19 [==============================] - 0s 3ms/step - loss: 2.5670 - accuracy: 0.5347

Epoch 9/15

19/19 [==============================] - 0s 3ms/step - loss: 2.4257 - accuracy: 0.5380

Epoch 10/15

19/19 [==============================] - 0s 3ms/step - loss: 2.3069 - accuracy: 0.5347

Epoch 11/15

19/19 [==============================] - 0s 3ms/step - loss: 2.1820 - accuracy: 0.5380

Epoch 12/15

19/19 [==============================] - 0s 3ms/step - loss: 2.0643 - accuracy: 0.5512

Epoch 13/15

19/19 [==============================] - 0s 3ms/step - loss: 1.9490 - accuracy: 0.5545

Epoch 14/15

19/19 [==============================] - 0s 3ms/step - loss: 1.8382 - accuracy: 0.5644

Epoch 15/15

19/19 [==============================] - 0s 3ms/step - loss: 1.7317 - accuracy: 0.5611

<tensorflow.python.keras.callbacks.History at 0x7fe8847e7e48>

pandas.DataFrame 로드 | TensorFlow Core

Google I/O는 끝입니다! TensorFlow 세션 확인하기 세션 보기 pandas.DataFrame 로드 이 튜토리얼은 pandas 데이터 프레임을 tf.data.Dataset에 로드하는 방법의 예제를 제공합니다. 이 튜토리얼에서는 Cleveland Clin

www.tensorflow.org

'DNN with Keras > TensorFlow' 카테고리의 다른 글

| TensorFlow Lite (1) (0) | 2022.08.23 |

|---|---|

| [TensorFlow] 텐서 작업 (0) | 2022.06.20 |

| [TensorFlow] NumPy 전처리 (0) | 2022.06.16 |

| [TensorFlow] CSV 전처리 (2) (0) | 2022.06.16 |

| [TensorFlow] CSV 전처리 (1) (0) | 2022.06.16 |