Task #2 : Classification between Unpleasant and Pleasant Events

# Prepare dataset

# Dataset with unpleasant and pleasant events

data_UP = epochs_UP.get_data()

labels_UP = epochs_UP.events[:,-1]

train_data_UP, test_data_UP, labels_train_UP, labels_test_UP = train_test_split(data_UP, labels_UP, test_size=0.3, random_state=42)

As in the first example, we build SVM, LR, and LDA models.

# SVM

clf_svm_pip = make_pipeline(Vectorizer(), StandardScaler(), svm.SVC(random_state=42))

parameters = {'svc__kernel':['linear', 'rbf', 'sigmoid'], 'svc__C':[0.1, 1, 10]}

gs_cv_svm = GridSearchCV(clf_svm_pip, parameters, scoring='accuracy', cv=StratifiedKFold(n_splits=5), return_train_score=True)

gs_cv_svm.fit(train_data_UP, labels_train_UP)

print('Best Parameters: {}'.format(gs_cv_svm.best_params_))

print('Best Score: {}'.format(gs_cv_svm.best_score_))

# Make prediction

predictions_svm = gs_cv_svm.predict(test_data_UP)

#Evaluation

report_svm = classification_report(labels_test_UP, predictions_svm, target_names=['Unpleasant', 'Pleasant'])

print('SVM Classification Report:\n {}'.format(report_svm))

acc_svm = accuracy_score(labels_test_UP, predictions_svm)

print("Accuracy of SVM model: {}".format(acc_svm))

precision_svm, recall_svm, fscore_svm, support_svm = precision_recall_fscore_support(labels_test_UP, predictions_svm, average='macro')

print('Precision: {0}, Recall: {1}, f1-score:{2}'.format(precision_svm, recall_svm, fscore_svm))

Output:

Best Parameters: {'svc__C': 0.1, 'svc__kernel': 'linear'}

Best Score: 0.6927374301675978

SVM Classification Report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Unpleasant | 0.68 | 0.70 | 0.69 | 46 |
| Pleasant | 0.53 | 0.52 | 0.52 | 31 |
| accuracy | | | 0.62 | 77 |
| macro avg | 0.61 | 0.61 | 0.61 | 77 |
| weighted avg | 0.62 | 0.62 | 0.62 | 77 |

Accuracy of SVM model: 0.6233766233766234

Precision: 0.6070921985815603, Recall: 0.605890603085554, f1-score:0.6063811034725894

# Logistic Regression

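# liblinear supports both the 'l1' and 'l2' penalties searched below; the lbfgs default of recent scikit-learn versions rejects 'l1'

clf_lr_pip = make_pipeline(Vectorizer(), StandardScaler(), LogisticRegression(random_state=42, solver='liblinear'))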

parameters = {'logisticregression__penalty':['l1', 'l2']}

gs_cv_lr = GridSearchCV(clf_lr_pip, parameters, scoring='accuracy')

gs_cv_lr.fit(train_data_UP, labels_train_UP)

print('Best Parameters: {}'.format(gs_cv_lr.best_params_))

print('Best Score: {}'.format(gs_cv_lr.best_score_))

# Prediction

predictions_lr = gs_cv_lr.predict(test_data_UP)

#Evaluation

report_lr = classification_report(labels_test_UP, predictions_lr, target_names=['Unpleasant', 'Pleasant'])

print('LR Classification Report:\n {}'.format(report_lr))

acc_lr = accuracy_score(labels_test_UP, predictions_lr)

print("Accuracy of LR model: {}".format(acc_lr))

precision_lr, recall_lr, fscore_lr, support_lr = precision_recall_fscore_support(labels_test_UP, predictions_lr, average='macro')

print('Precision: {0}, Recall: {1}, f1-score:{2}'.format(precision_lr, recall_lr, fscore_lr))

Output:

Best Parameters: {'logisticregression__penalty': 'l1'}

Best Score: 0.7039106145251397

LR Classification Report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Unpleasant | 0.76 | 0.74 | 0.75 | 46 |
| Pleasant | 0.62 | 0.65 | 0.63 | 31 |
| accuracy | | | 0.70 | 77 |
| macro avg | 0.69 | 0.69 | 0.69 | 77 |
| weighted avg | 0.70 | 0.70 | 0.70 | 77 |

Accuracy of LR model: 0.7012987012987013

Precision: 0.6902777777777778, Recall: 0.6921458625525947, f1-score:0.6910866910866911

# LDA

clf_lda_pip = make_pipeline(Vectorizer(), StandardScaler(), LinearDiscriminantAnalysis(solver='svd'))

clf_lda_pip.fit(train_data_UP,labels_train_UP)

#Prediction

predictions_lda = clf_lda_pip.predict(test_data_UP)

#Evaluation

report_lda = classification_report(labels_test_UP, predictions_lda, target_names=['Unpleasant', 'Pleasant'])

print('LDA Classification Report:\n {}'.format(report_lda))

acc_lda = accuracy_score(labels_test_UP, predictions_lda)

print("Accuracy of LDA model: {}".format(acc_lda))

precision_lda, recall_lda, fscore_lda, support_lda = precision_recall_fscore_support(labels_test_UP, predictions_lda, average='macro')

print('Precision: {0}, Recall: {1}, f1-score:{2}'.format(precision_lda, recall_lda, fscore_lda))

Output:

LDA Classification Report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Unpleasant | 0.68 | 0.65 | 0.67 | 46 |
| Pleasant | 0.52 | 0.55 | 0.53 | 31 |
| accuracy | | | 0.61 | 77 |
| macro avg | 0.60 | 0.60 | 0.60 | 77 |
| weighted avg | 0.61 | 0.61 | 0.61 | 77 |

Accuracy of LDA model: 0.6103896103896104

Precision: 0.5984848484848484, Recall: 0.6002805049088359, f1-score:0.5989583333333333

# Store the results of this task for the comparison plots

accuracies.append([acc_svm, acc_lr, acc_lda])

f1_scores.append([fscore_svm, fscore_lr, fscore_lda])
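The fit, predict, and evaluate steps are repeated for every model and every task, so they could be wrapped in one helper. The following is only a minimal sketch: `evaluate_model` is a hypothetical name that does not appear in the original tutorial, and the data is a synthetic 2D stand-in for the vectorized epochs, which is why no `Vectorizer()` step is used here.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.metrics import accuracy_score, precision_recall_fscore_support
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def evaluate_model(model, train_data, train_labels, test_data, test_labels):
    """Hypothetical helper: fit `model`, predict the test set, return (accuracy, macro F1)."""
    model.fit(train_data, train_labels)
    predictions = model.predict(test_data)
    acc = accuracy_score(test_labels, predictions)
    _, _, fscore, _ = precision_recall_fscore_support(
        test_labels, predictions, average='macro', zero_division=0)
    return acc, fscore


# Synthetic stand-in data (assumption): 100 samples x 20 features, two balanced classes.
rng = np.random.default_rng(42)
X = rng.normal(size=(100, 20))
y = np.repeat([0, 1], 50)
X[y == 1] += 1.0  # shift one class so that the two classes are separable

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y)

lda = make_pipeline(StandardScaler(), LinearDiscriminantAnalysis(solver='svd'))
acc_demo, f1_demo = evaluate_model(lda, X_train, y_train, X_test, y_test)
print('Accuracy: {}, macro f1-score: {}'.format(acc_demo, f1_demo))
```

With a helper like this, each task would only need to build its pipelines and collect the returned values into `accuracies` and `f1_scores`.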

Task #3 : Classification between Neutral and Pleasant Events

# Dataset with neutral and pleasant events

data_NP = epochs_NP.get_data()

labels_NP = epochs_NP.events[:,-1]

train_data_NP, test_data_NP, labels_train_NP, labels_test_NP = train_test_split(data_NP, labels_NP, test_size=0.3, random_state=42)

# SVM

clf_svm_pip = make_pipeline(Vectorizer(), StandardScaler(), svm.SVC(random_state=42))

parameters = {'svc__kernel':['linear', 'rbf', 'sigmoid'], 'svc__C':[0.1, 1, 10]}

gs_cv_svm = GridSearchCV(clf_svm_pip, parameters, scoring='accuracy', cv=StratifiedKFold(n_splits=5), return_train_score=True)

gs_cv_svm.fit(train_data_NP, labels_train_NP)

print('Best Parameters: {}'.format(gs_cv_svm.best_params_))

print('Best Score: {}'.format(gs_cv_svm.best_score_))

# Prediction

predictions_svm = gs_cv_svm.predict(test_data_NP)

#Evaluation

report_svm = classification_report(labels_test_NP, predictions_svm, target_names=['Neutral', 'Pleasant'])

print('SVM Classification Report:\n {}'.format(report_svm))

acc_svm = accuracy_score(labels_test_NP, predictions_svm)

print("Accuracy of SVM model: {}".format(acc_svm))

precision_svm, recall_svm, fscore_svm, support_svm = precision_recall_fscore_support(labels_test_NP, predictions_svm, average='macro')

print('Precision: {0}, Recall: {1}, f1-score:{2}'.format(precision_svm, recall_svm, fscore_svm))

Output:

Best Parameters: {'svc__C': 0.1, 'svc__kernel': 'linear'}

Best Score: 0.574585635359116

SVM Classification Report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Neutral | 0.75 | 0.63 | 0.68 | 43 |
| Pleasant | 0.62 | 0.74 | 0.68 | 35 |
| accuracy | | | 0.68 | 78 |
| macro avg | 0.68 | 0.69 | 0.68 | 78 |
| weighted avg | 0.69 | 0.68 | 0.68 | 78 |

Accuracy of SVM model: 0.6794871794871795

Precision: 0.6845238095238095, Recall: 0.6853820598006645, f1-score:0.6794344895610718

# Logistic Regression

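# liblinear supports both the 'l1' and 'l2' penalties searched below; the lbfgs default of recent scikit-learn versions rejects 'l1'

clf_lr_pip = make_pipeline(Vectorizer(), StandardScaler(), LogisticRegression(random_state=42, solver='liblinear'))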

parameters = {'logisticregression__penalty':['l1', 'l2']}

gs_cv_lr = GridSearchCV(clf_lr_pip, parameters, scoring='accuracy')

gs_cv_lr.fit(train_data_NP, labels_train_NP)

print('Best Parameters: {}'.format(gs_cv_lr.best_params_))

print('Best Score: {}'.format(gs_cv_lr.best_score_))

# Prediction

predictions_lr = gs_cv_lr.predict(test_data_NP)

#Evaluation

report_lr = classification_report(labels_test_NP, predictions_lr, target_names=['Neutral', 'Pleasant'])

print('LR Classification Report:\n {}'.format(report_lr))

acc_lr = accuracy_score(labels_test_NP, predictions_lr)

print("Accuracy of LR model: {}".format(acc_lr))

precision_lr, recall_lr, fscore_lr, support_lr = precision_recall_fscore_support(labels_test_NP, predictions_lr, average='macro')

print('Precision: {0}, Recall: {1}, f1-score:{2}'.format(precision_lr, recall_lr, fscore_lr))

Output:

Best Parameters: {'logisticregression__penalty': 'l1'}

Best Score: 0.6795580110497238

LR Classification Report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Neutral | 0.80 | 0.77 | 0.79 | 43 |
| Pleasant | 0.73 | 0.77 | 0.75 | 35 |
| accuracy | | | 0.77 | 78 |
| macro avg | 0.77 | 0.77 | 0.77 | 78 |
| weighted avg | 0.77 | 0.77 | 0.77 | 78 |

Accuracy of LR model: 0.7692307692307693

Precision: 0.7673038892551087, Recall: 0.7694352159468438, f1-score:0.7678571428571429

# LDA

clf_lda_pip = make_pipeline(Vectorizer(), StandardScaler(), LinearDiscriminantAnalysis(solver='svd'))

clf_lda_pip.fit(train_data_NP,labels_train_NP)

#Prediction

predictions_lda = clf_lda_pip.predict(test_data_NP)

#Evaluation

report_lda = classification_report(labels_test_NP, predictions_lda, target_names=['Neutral', 'Pleasant'])

print('LDA Classification Report:\n {}'.format(report_lda))

acc_lda = accuracy_score(labels_test_NP, predictions_lda)

print("Accuracy of LDA model: {}".format(acc_lda))

precision_lda, recall_lda, fscore_lda, support_lda = precision_recall_fscore_support(labels_test_NP, predictions_lda, average='macro')

print('Precision: {0}, Recall: {1}, f1-score:{2}'.format(precision_lda, recall_lda, fscore_lda))

Output:

LDA Classification Report:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Neutral | 0.71 | 0.58 | 0.64 | 43 |
| Pleasant | 0.58 | 0.71 | 0.64 | 35 |
| accuracy | | | 0.64 | 78 |
| macro avg | 0.65 | 0.65 | 0.64 | 78 |
| weighted avg | 0.65 | 0.64 | 0.64 | 78 |

Accuracy of LDA model: 0.6410256410256411

Precision: 0.6478405315614618, Recall: 0.6478405315614618, f1-score:0.6410256410256411

# Store the results of this task for the comparison plots

accuracies.append([acc_svm, acc_lr, acc_lda])

f1_scores.append([fscore_svm, fscore_lr, fscore_lda])

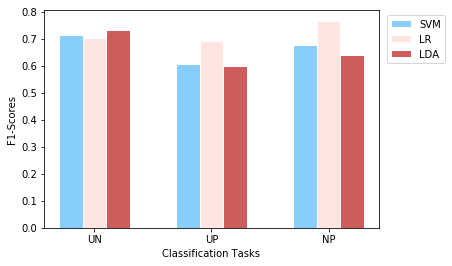

We use a bar plot to show the accuracy values of all three tasks together.

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

%matplotlib inline

def plotEvalMetrics(tasks, labels, evalMetric, metricName):

    width = 0.2  # the width of the bars

    # evalMetric holds one [SVM, LR, LDA] row per task, so there is one bar group per row

    n_tasks = len(evalMetric)

    # Transpose so that each entry holds one model's scores across all tasks

    scores_by_model = list(zip(*evalMetric))

    # Set position of bar on X axis

    rects1 = np.arange(n_tasks)

    rects2 = [x + width for x in rects1]

    rects3 = [x + width for x in rects2]

    plt.bar(rects1, scores_by_model[0], color='#87CEFA', width=width, edgecolor='white', label=labels[0])

    plt.bar(rects2, scores_by_model[1], color='#FFE4E1', width=width, edgecolor='white', label=labels[1])

    plt.bar(rects3, scores_by_model[2], color='#CD5C5C', width=width, edgecolor='white', label=labels[2])

    plt.xlabel('Classification Tasks')

    # Put each tick under the middle bar of its group

    plt.xticks([r + width for r in range(n_tasks)], tasks)

    plt.ylabel(metricName)

    plt.legend(bbox_to_anchor=(1.01, 1), loc='upper left')

    plt.show()

#Plot Accuracies

tasks = ['UN', 'UP', 'NP']

labels = ['SVM', 'LR', 'LDA']

plotEvalMetrics(tasks, labels, accuracies, 'Accuracy')

print(accuracies)

Output:

[[0.72, 0.7066666666666667, 0.7333333333333333], [0.6233766233766234, 0.7012987012987013, 0.6103896103896104], [0.6794871794871795, 0.7692307692307693, 0.6410256410256411]]

# Plot F1 Scores

tasks = ['UN', 'UP', 'NP']

labels = ['SVM', 'LR', 'LDA']

plotEvalMetrics(tasks, labels, f1_scores, 'F1-Scores')
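The grouped layout of the bar plot comes down to shifting each model's bars by one bar width per model. As a rough sketch of that arithmetic (the helper `grouped_bar_positions` is a hypothetical name, not part of the original code), the x positions for any number of tasks and models could be computed like this:

```python
def grouped_bar_positions(n_groups, n_bars, width):
    """Return one list of x positions per bar series (hypothetical helper).

    Series k is shifted by k * width, so the bars of one group sit side by side.
    """
    return [[group + bar * width for group in range(n_groups)]
            for bar in range(n_bars)]


n_tasks, n_models, width = 3, 3, 0.2
positions = grouped_bar_positions(n_tasks, n_models, width)

# Tick positions centred under each group; for three bars this is group + width
tick_positions = [group + width * (n_models - 1) / 2 for group in range(n_tasks)]

for model_index, xs in enumerate(positions):
    print(model_index, [round(x, 2) for x in xs])
print([round(t, 2) for t in tick_positions])
```

For three models the tick offset equals one bar width, which matches the `r + width` used for `plt.xticks` in `plotEvalMetrics`.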

Overview

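We trained three classifiers to classify the EEG responses to three different conditions at the single-participant level. The test accuracies obtained from the models are shown in the first plot above. According to the results, LDA performed best on the first task, the classification of unpleasant versus neutral events (0.73, against 0.72 for SVM and 0.71 for LR). For the classification of unpleasant versus pleasant events, logistic regression is the best-performing model and outperforms the others by a clear margin (0.70, against 0.62 for SVM and 0.61 for LDA). On the third task, the classification of neutral versus pleasant events, the accuracies again differ between models, and logistic regression is clearly the best (0.77, against 0.68 for SVM and 0.64 for LDA). Although certain models performed better on certain tasks, none of them exceeded 77% accuracy.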
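The same ranking can be read off programmatically. The sketch below reuses the `tasks`, `labels`, and `accuracies` values printed earlier; `best_per_task` is a hypothetical name introduced only for this illustration.

```python
tasks = ['UN', 'UP', 'NP']
labels = ['SVM', 'LR', 'LDA']

# Accuracy values copied from the printed `accuracies` list (one row per task)
accuracies = [
    [0.72, 0.7066666666666667, 0.7333333333333333],
    [0.6233766233766234, 0.7012987012987013, 0.6103896103896104],
    [0.6794871794871795, 0.7692307692307693, 0.6410256410256411],
]

best_per_task = {}
for task, scores in zip(tasks, accuracies):
    # Index of the highest accuracy within this task's row
    best_index = max(range(len(scores)), key=lambda i: scores[i])
    best_per_task[task] = (labels[best_index], scores[best_index])

for task, (model, score) in best_per_task.items():
    print('{}: {} ({:.3f})'.format(task, model, score))
# UN: LDA (0.733)
# UP: LR (0.701)
# NP: LR (0.769)
```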

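Accuracy is one of the most common evaluation metrics for machine learning models, but on its own it is not enough to conclude that one model performs better than another. In some cases it can be misleading. For example, when the classes are heavily imbalanced, a model that assigns most instances to a single class can still reach a high accuracy. Another case is when false positives and false negatives have different consequences; particularly in the medical domain, this is an important aspect of model evaluation. Therefore, besides accuracy, one should also consider precision, recall, and the f1-score, a metric that combines precision and recall.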
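To make the imbalance problem concrete, here is a small illustrative sketch with made-up labels (95 negatives, 5 positives) and a model that always predicts the majority class. The function `binary_metrics` is a hypothetical helper written for this example; it follows the standard definitions and treats an undefined ratio as 0.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and f1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Ratios with an empty denominator are reported as 0.0 (an assumption of this sketch)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return accuracy, precision, recall, f1


# Made-up, heavily imbalanced labels: 95 negatives and 5 positives
y_true = [0] * 95 + [1] * 5
# A model that always predicts the majority class
y_pred = [0] * 100

accuracy, precision, recall, f1 = binary_metrics(y_true, y_pred)
print('Accuracy: {}, Precision: {}, Recall: {}, f1-score: {}'.format(
    accuracy, precision, recall, f1))
# Accuracy: 0.95, Precision: 0.0, Recall: 0.0, f1-score: 0.0
```

The accuracy looks strong even though not a single positive instance is detected, which is exactly what precision, recall, and the f1-score expose.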

Source: Single-Participant Analysis, Tutorial #4: Machine Learning Algorithms for EEG Data of Single Participants (neuro.inf.unibe.ch)
