Autoencoder

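An autoencoder is an unsupervised learning technique in which an encoder converts the input into a compact code and a decoder then reconstructs the input from that code, rather than predicting a separate label. In other words, an autoencoder consists of an encoder that compresses high-dimensional information and a decoder that restores the compressed information to its original form.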

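The original data passes through the encoder to become a compressed representation, and then through the decoder to be restored to the original data. An autoencoder model is built as a combined encoder-decoder. If the decoder is later split off and fed compressed codes directly, it can be steered to generate new, fake images that resemble the original images.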
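To make the encoder-decoder round trip concrete, here is a minimal sketch of a linear autoencoder trained with plain gradient descent in NumPy. The toy data, the weight names `w_enc` and `w_dec`, the learning rate, and the step count are all assumptions chosen for illustration; this is not the Keras model used in the rest of the post.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 200 samples in 8-D that actually lie on a 2-D subspace
latent = rng.standard_normal((200, 2))
mixing = rng.standard_normal((2, 8))
x = latent @ mixing
x = x / x.std()                              # rescale for stable training

w_enc = 0.1 * rng.standard_normal((8, 2))    # encoder: 8 -> 2 (compress)
w_dec = 0.1 * rng.standard_normal((2, 8))    # decoder: 2 -> 8 (restore)

def reconstruction_mse(x, w_enc, w_dec):
    """Mean squared error between x and its reconstruction."""
    return float(np.mean((x @ w_enc @ w_dec - x) ** 2))

initial = reconstruction_mse(x, w_enc, w_dec)

lr = 0.01
for _ in range(2000):
    code = x @ w_enc                         # encode
    err = code @ w_dec - x                   # decode and compare with input
    grad_dec = code.T @ err / len(x)
    grad_enc = x.T @ (err @ w_dec.T) / len(x)
    w_dec -= lr * grad_dec
    w_enc -= lr * grad_enc

final = reconstruction_mse(x, w_enc, w_dec)
print(initial, final)
```

Because the toy data is exactly 2-dimensional, a 2-unit code can in principle reconstruct it almost perfectly, so the reconstruction error should fall well below its starting value.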

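The autoencoder is trained on a per-pixel reconstruction error between the original and the decoded data, computed over each pixel of the 28 x 28 MNIST images. MSE is one common choice for this error; the code below compiles the model with binary cross-entropy instead, which is also computed pixel by pixel. The original data is used as the label data as well, because the model's performance depends on reducing the difference between the input data and the output data. The smaller the error, the closer the reconstructed image is to the original.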
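As a rough illustration of the per-pixel reconstruction error, the sketch below computes both MSE and binary cross-entropy between a flattened image and two candidate reconstructions using NumPy only. The helper names `mse_loss` and `bce_loss` and the random stand-in arrays are assumptions for illustration; Keras computes its losses internally.

```python
import numpy as np

def mse_loss(x, x_hat):
    """Mean squared error averaged over all pixels."""
    return float(np.mean((x - x_hat) ** 2))

def bce_loss(x, x_hat, eps=1e-7):
    """Binary cross-entropy averaged over all pixels; values in [0, 1]."""
    x_hat = np.clip(x_hat, eps, 1.0 - eps)   # avoid log(0)
    return float(-np.mean(x * np.log(x_hat) + (1.0 - x) * np.log(1.0 - x_hat)))

rng = np.random.default_rng(0)
x = rng.random(784)                          # stand-in for a flattened 28x28 image
good = np.clip(x + 0.01 * rng.standard_normal(784), 0.0, 1.0)  # close to x
bad = rng.random(784)                        # unrelated "reconstruction"

print(mse_loss(x, good), mse_loss(x, bad))
print(bce_loss(x, good), bce_loss(x, bad))
```

Under either loss the reconstruction that is closer to the input scores lower, which is why minimizing the loss pushes the output toward the original image.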

```python
import keras
from keras import layers

# This is the size of our encoded representations
encoding_dim = 32  # 32 floats -> compression of factor 24.5, assuming the input is 784 floats

# This is our input image
input_img = keras.Input(shape=(784,))
# "encoded" is the encoded representation of the input
encoded = layers.Dense(encoding_dim, activation='relu')(input_img)
# "decoded" is the lossy reconstruction of the input
decoded = layers.Dense(784, activation='sigmoid')(encoded)

# This model maps an input to its reconstruction
autoencoder = keras.Model(input_img, decoded)

# This model maps an input to its encoded representation
encoder = keras.Model(input_img, encoded)

# This is our encoded (32-dimensional) input
encoded_input = keras.Input(shape=(encoding_dim,))
# Retrieve the last layer of the autoencoder model
decoder_layer = autoencoder.layers[-1]
# Create the decoder model
decoder = keras.Model(encoded_input, decoder_layer(encoded_input))

autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

from keras.datasets import mnist
import numpy as np

(x_train, _), (x_test, _) = mnist.load_data()

# Scale pixels to [0, 1] and flatten each 28x28 image into a 784-vector
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_train = x_train.reshape((len(x_train), np.prod(x_train.shape[1:])))
x_test = x_test.reshape((len(x_test), np.prod(x_test.shape[1:])))
print(x_train.shape)
print(x_test.shape)

# The input is also the target: the model learns to reproduce x_train
autoencoder.fit(x_train, x_train,
                epochs=50,
                batch_size=256,
                shuffle=True,
                validation_data=(x_test, x_test))

# Encode and decode some digits
# Note that we take them from the *test* set
encoded_imgs = encoder.predict(x_test)
decoded_imgs = decoder.predict(encoded_imgs)

import matplotlib.pyplot as plt

n = 10  # How many digits we will display
plt.figure(figsize=(20, 4))
for i in range(n):
    # Display original
    ax = plt.subplot(2, n, i + 1)
    plt.imshow(x_test[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)

    # Display reconstruction
    ax = plt.subplot(2, n, i + 1 + n)
    plt.imshow(decoded_imgs[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
plt.show()
```

Adding a sparsity constraint on the encoded representation

```python
from keras import regularizers

encoding_dim = 32

input_img = keras.Input(shape=(784,))
# Add a Dense layer with a L1 activity regularizer
# (10e-5 is the same value as 1e-4)
encoded = layers.Dense(encoding_dim, activation='relu',
                       activity_regularizer=regularizers.l1(10e-5))(input_img)
decoded = layers.Dense(784, activation='sigmoid')(encoded)

autoencoder = keras.Model(input_img, decoded)
```
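The effect of an L1 activity penalty can be sketched without Keras: the penalty grows with the summed absolute value of the code, so codes with few active units are cheaper. The helper `l1_activity_penalty` below is a hypothetical illustration; Keras applies its own normalization (for example over the batch), so the absolute numbers should not be read as what Keras reports.

```python
import numpy as np

def l1_activity_penalty(activations, rate=1e-4):
    """Penalty proportional to the sum of absolute activations."""
    return float(rate * np.sum(np.abs(activations)))

dense_code = np.ones((1, 32))        # all 32 units active
sparse_code = np.zeros((1, 32))
sparse_code[0, :3] = 1.0             # only 3 units active

print(l1_activity_penalty(dense_code))
print(l1_activity_penalty(sparse_code))
```

Since this penalty is added to the reconstruction loss, the optimizer is nudged toward codes in which most units stay at zero for any given input.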

Deep autoencoder

```python
input_img = keras.Input(shape=(784,))
# Encoder: 784 -> 128 -> 64 -> 32
encoded = layers.Dense(128, activation='relu')(input_img)
encoded = layers.Dense(64, activation='relu')(encoded)
encoded = layers.Dense(32, activation='relu')(encoded)

# Decoder: 32 -> 64 -> 128 -> 784
decoded = layers.Dense(64, activation='relu')(encoded)
decoded = layers.Dense(128, activation='relu')(decoded)
decoded = layers.Dense(784, activation='sigmoid')(decoded)

autoencoder = keras.Model(input_img, decoded)
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

autoencoder.fit(x_train, x_train,
                epochs=100,
                batch_size=256,
                shuffle=True,
                validation_data=(x_test, x_test))
```

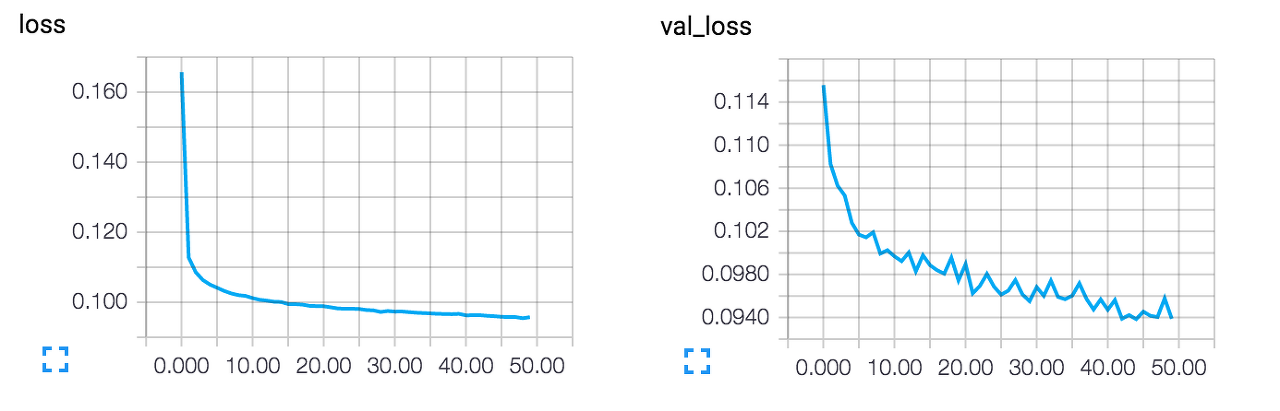
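One way to compare the single-layer and deep variants is by trainable parameter count. The sketch below counts weights and biases for a stack of Dense layers from the layer sizes alone; `dense_param_count` is a hypothetical helper, not a Keras function.

```python
def dense_param_count(layer_sizes):
    """Total weights + biases for consecutive Dense layers."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

simple = dense_param_count([784, 32, 784])
deep = dense_param_count([784, 128, 64, 32, 64, 128, 784])
print(simple, deep)  # 50992 222384
```

Both models squeeze the image through the same 32-dimensional bottleneck; the deep version spends roughly four times as many parameters on the mapping into and out of it.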

Convolutional autoencoder

```python
import keras
from keras import layers

input_img = keras.Input(shape=(28, 28, 1))

x = layers.Conv2D(16, (3, 3), activation='relu', padding='same')(input_img)
x = layers.MaxPooling2D((2, 2), padding='same')(x)
x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(x)
x = layers.MaxPooling2D((2, 2), padding='same')(x)
x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(x)
encoded = layers.MaxPooling2D((2, 2), padding='same')(x)

# at this point the representation is (4, 4, 8) i.e. 128-dimensional

x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(encoded)
x = layers.UpSampling2D((2, 2))(x)
x = layers.Conv2D(8, (3, 3), activation='relu', padding='same')(x)
x = layers.UpSampling2D((2, 2))(x)
# No padding='same' here: 16x16 shrinks to 14x14, so the last upsampling gives 28x28
x = layers.Conv2D(16, (3, 3), activation='relu')(x)
x = layers.UpSampling2D((2, 2))(x)
decoded = layers.Conv2D(1, (3, 3), activation='sigmoid', padding='same')(x)

autoencoder = keras.Model(input_img, decoded)
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

from keras.datasets import mnist
import numpy as np

(x_train, _), (x_test, _) = mnist.load_data()

# Keep the 2D layout this time: (samples, height, width, channels)
x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_train = np.reshape(x_train, (len(x_train), 28, 28, 1))
x_test = np.reshape(x_test, (len(x_test), 28, 28, 1))

# To watch training, start TensorBoard in a separate terminal (shell command):
#   tensorboard --logdir=/tmp/autoencoder
from keras.callbacks import TensorBoard

autoencoder.fit(x_train, x_train,
                epochs=50,
                batch_size=128,
                shuffle=True,
                validation_data=(x_test, x_test),
                callbacks=[TensorBoard(log_dir='/tmp/autoencoder')])
```

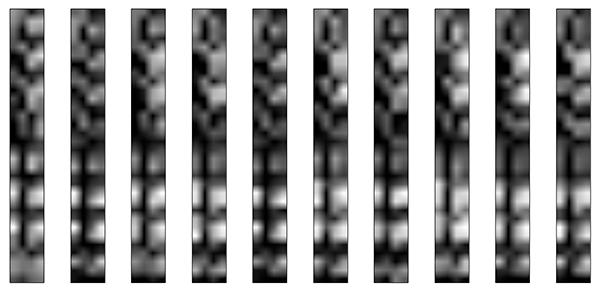
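The spatial sizes in the convolutional model can be checked by hand. The sketch below traces height (equal to width) through the pooling, upsampling, and unpadded convolution steps; the helper names are assumptions, and the arithmetic follows the usual rules for `padding='same'` pooling (round up) and stride-1 `padding='valid'` convolution (size minus kernel plus one).

```python
import math

def same_pool(size, pool=2):
    """Spatial size after MaxPooling2D with padding='same'."""
    return math.ceil(size / pool)

def valid_conv(size, kernel=3):
    """Spatial size after a stride-1 Conv2D with padding='valid'."""
    return size - kernel + 1

def upsample(size, factor=2):
    """Spatial size after UpSampling2D."""
    return size * factor

size = 28
trace = [size]
for _ in range(3):                 # 'same' convs keep the size; each pool halves it
    size = same_pool(size)
    trace.append(size)
for _ in range(2):                 # first two upsampling steps
    size = upsample(size)
    trace.append(size)
size = valid_conv(size)            # the one Conv2D without padding='same'
trace.append(size)
size = upsample(size)              # final upsampling
trace.append(size)

print(trace)  # [28, 14, 7, 4, 8, 16, 14, 28]
```

The trace shows why one decoder convolution is left unpadded: without the 16 to 14 step, three doublings from 4 would produce a 32 x 32 output that no longer matches the 28 x 28 target.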

```python
import matplotlib.pyplot as plt

decoded_imgs = autoencoder.predict(x_test)

n = 10
plt.figure(figsize=(20, 4))
for i in range(1, n + 1):
    # Display original
    ax = plt.subplot(2, n, i)
    plt.imshow(x_test[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)

    # Display reconstruction
    ax = plt.subplot(2, n, i + n)
    plt.imshow(decoded_imgs[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
plt.show()
```

Image denoising

```python
from keras.datasets import mnist
import numpy as np
import matplotlib.pyplot as plt

(x_train, _), (x_test, _) = mnist.load_data()

x_train = x_train.astype('float32') / 255.
x_test = x_test.astype('float32') / 255.
x_train = np.reshape(x_train, (len(x_train), 28, 28, 1))
x_test = np.reshape(x_test, (len(x_test), 28, 28, 1))

# Corrupt the images with Gaussian noise, then clip back into [0, 1]
noise_factor = 0.5
x_train_noisy = x_train + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=x_train.shape)
x_test_noisy = x_test + noise_factor * np.random.normal(loc=0.0, scale=1.0, size=x_test.shape)

x_train_noisy = np.clip(x_train_noisy, 0., 1.)
x_test_noisy = np.clip(x_test_noisy, 0., 1.)

# Show a few noisy test digits
n = 10
plt.figure(figsize=(20, 2))
for i in range(1, n + 1):
    ax = plt.subplot(1, n, i)
    plt.imshow(x_test_noisy[i].reshape(28, 28))
    plt.gray()
    ax.get_xaxis().set_visible(False)
    ax.get_yaxis().set_visible(False)
plt.show()

input_img = keras.Input(shape=(28, 28, 1))

x = layers.Conv2D(32, (3, 3), activation='relu', padding='same')(input_img)
x = layers.MaxPooling2D((2, 2), padding='same')(x)
x = layers.Conv2D(32, (3, 3), activation='relu', padding='same')(x)
encoded = layers.MaxPooling2D((2, 2), padding='same')(x)

# At this point the representation is (7, 7, 32)

x = layers.Conv2D(32, (3, 3), activation='relu', padding='same')(encoded)
x = layers.UpSampling2D((2, 2))(x)
x = layers.Conv2D(32, (3, 3), activation='relu', padding='same')(x)
x = layers.UpSampling2D((2, 2))(x)
decoded = layers.Conv2D(1, (3, 3), activation='sigmoid', padding='same')(x)

autoencoder = keras.Model(input_img, decoded)
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')

from keras.callbacks import TensorBoard

# Noisy images are the input; the clean images are the target
autoencoder.fit(x_train_noisy, x_train,
                epochs=100,
                batch_size=128,
                shuffle=True,
                validation_data=(x_test_noisy, x_test),
                callbacks=[TensorBoard(log_dir='/tmp/tb', histogram_freq=0, write_graph=False)])
```

Sequence-to-sequence

```python
timesteps = ...  # Length of your sequences
input_dim = ...
latent_dim = ...

inputs = keras.Input(shape=(timesteps, input_dim))
# Encode the whole sequence into a single latent vector
encoded = layers.LSTM(latent_dim)(inputs)

# Repeat the latent vector once per timestep and decode it back into a sequence
decoded = layers.RepeatVector(timesteps)(encoded)
decoded = layers.LSTM(input_dim, return_sequences=True)(decoded)

sequence_autoencoder = keras.Model(inputs, decoded)
encoder = keras.Model(inputs, encoded)
```
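The role of `RepeatVector` in the model above is purely a shape change: one latent vector per sequence is copied once per timestep so the decoder LSTM has an input at every step. A NumPy analogue, with the hypothetical helper name `repeat_vector` and made-up sizes, looks like this:

```python
import numpy as np

def repeat_vector(encoded, timesteps):
    """NumPy analogue of RepeatVector: (batch, d) -> (batch, timesteps, d)."""
    return np.repeat(encoded[:, np.newaxis, :], timesteps, axis=1)

encoded = np.array([[1.0, 2.0, 3.0]])        # one sequence, latent size 3
repeated = repeat_vector(encoded, timesteps=4)
print(repeated.shape)  # (1, 4, 3)
```

Every timestep receives the same vector, so all information about ordering has to be reconstructed by the decoder LSTM's own state.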

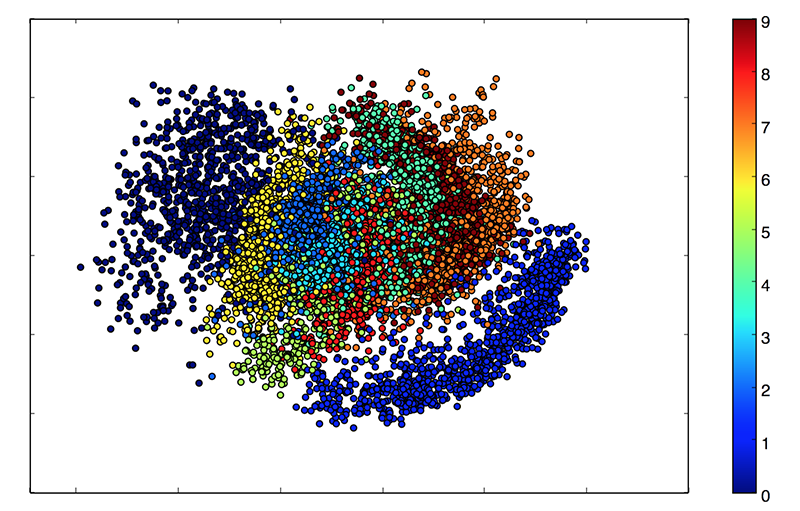

Variational autoencoder (VAE)

```python
original_dim = 28 * 28
intermediate_dim = 64
latent_dim = 2

inputs = keras.Input(shape=(original_dim,))
h = layers.Dense(intermediate_dim, activation='relu')(inputs)
z_mean = layers.Dense(latent_dim)(h)
z_log_sigma = layers.Dense(latent_dim)(h)

# K.random_normal / K.shape / K.exp are written against the Keras 2.x backend API
from keras import backend as K

def sampling(args):
    z_mean, z_log_sigma = args
    # The source draws epsilon with stddev=0.1; the textbook formulation uses stddev=1
    epsilon = K.random_normal(shape=(K.shape(z_mean)[0], latent_dim),
                              mean=0., stddev=0.1)
    return z_mean + K.exp(z_log_sigma) * epsilon

z = layers.Lambda(sampling)([z_mean, z_log_sigma])

# Create encoder
encoder = keras.Model(inputs, [z_mean, z_log_sigma, z], name='encoder')

# Create decoder
latent_inputs = keras.Input(shape=(latent_dim,), name='z_sampling')
x = layers.Dense(intermediate_dim, activation='relu')(latent_inputs)
outputs = layers.Dense(original_dim, activation='sigmoid')(x)
decoder = keras.Model(latent_inputs, outputs, name='decoder')

# Instantiate VAE model
outputs = decoder(encoder(inputs)[2])
vae = keras.Model(inputs, outputs, name='vae_mlp')

# This post does not show a loss or training step for `vae`;
# train it before running the plots below.

# The scatter plot is colored by digit label, which earlier cells discarded
from keras.datasets import mnist
(_, _), (_, y_test) = mnist.load_data()
x_test_flat = x_test.reshape((len(x_test), original_dim))

# encoder returns [z_mean, z_log_sigma, z]; plot the latent means
z_mean_test, _, _ = encoder.predict(x_test_flat)
plt.figure(figsize=(6, 6))
plt.scatter(z_mean_test[:, 0], z_mean_test[:, 1], c=y_test)
plt.colorbar()
plt.show()
```

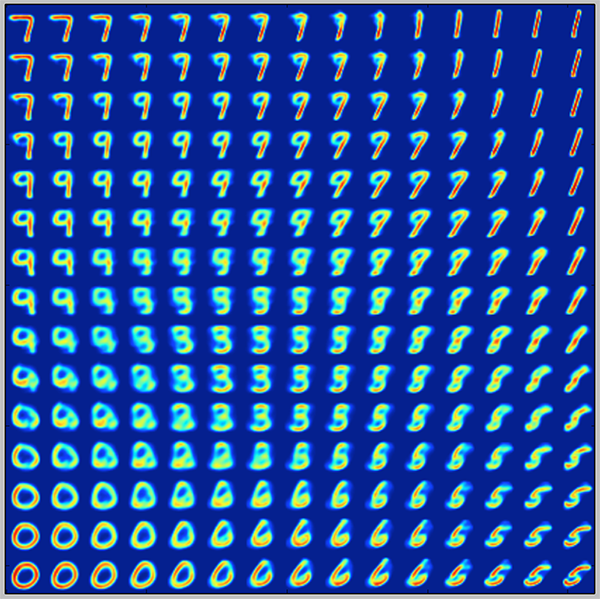
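The sampling step and the regularization term that usually accompanies it can be sketched in NumPy. This is an illustration under stated assumptions, not the post's training code: it treats `z_log_sigma` as the log of the standard deviation (matching the `exp(z_log_sigma)` factor in the `sampling` function), draws epsilon from a standard normal rather than the `stddev=0.1` used above, and the function names are hypothetical.

```python
import numpy as np

def sample_latent(z_mean, z_log_sigma, rng):
    """Reparameterization: z = mean + sigma * epsilon, epsilon ~ N(0, 1)."""
    epsilon = rng.standard_normal(z_mean.shape)
    return z_mean + np.exp(z_log_sigma) * epsilon

def kl_to_standard_normal(z_mean, z_log_sigma):
    """KL(N(mean, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * np.sum(
        1.0 + 2.0 * z_log_sigma - z_mean ** 2 - np.exp(2.0 * z_log_sigma),
        axis=-1,
    )

rng = np.random.default_rng(0)
z_mean = np.array([[1.0, 0.0]])
z_log_sigma = np.zeros((1, 2))               # sigma = 1 in both dimensions

z = sample_latent(z_mean, z_log_sigma, rng)
print(z.shape)                               # (1, 2)
print(kl_to_standard_normal(z_mean, z_log_sigma))
```

A typical VAE objective adds this KL term to the reconstruction loss, which pulls the encoded distributions toward a standard normal and keeps the latent space densely filled enough to sample from.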

```python
# Display a 2D manifold of the digits
n = 15  # figure with 15x15 digits
digit_size = 28
figure = np.zeros((digit_size * n, digit_size * n))
# We will sample n points within [-15, 15] standard deviations
grid_x = np.linspace(-15, 15, n)
grid_y = np.linspace(-15, 15, n)

for i, yi in enumerate(grid_x):
    for j, xi in enumerate(grid_y):
        # Decode one latent point and paste the digit into the grid
        z_sample = np.array([[xi, yi]])
        x_decoded = decoder.predict(z_sample)
        digit = x_decoded[0].reshape(digit_size, digit_size)
        figure[i * digit_size: (i + 1) * digit_size,
               j * digit_size: (j + 1) * digit_size] = digit

plt.figure(figsize=(10, 10))
plt.imshow(figure)
plt.show()
```

Source: Building Autoencoders in Keras, by Francois Chollet (Sat 14 May 2016, Tutorials). The post states that it was written in early 2016 and is therefore badly outdated.

https://blog.keras.io/building-autoencoders-in-keras.html
