
GTSRB (German Traffic Sign Recognition Benchmark)

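GTSRB (German Traffic Sign Recognition Benchmark) was created by German researchers in neuroinformatics. It is a dataset for predicting (classifying) traffic signs and contains about 40,000 small color images covering 43 traffic sign classes. The images come in different sizes, and the code below resizes every image to 32 x 32.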

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import Dense, Dropout, Input
from tensorflow.keras.layers import Flatten, Conv2D, MaxPooling2D
```

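Download the file:

```python
# Download the archive (Colab shell command)
!wget https://sid.erda.dk/public/archives/daaeac0d7ce1152aea9b61d9f1e19370/GTSRB_Final_Training_Images.zip
```

Or save the archive to Google Drive, mount the drive, and copy the file over:

```python
from google.colab import drive
import shutil

drive.mount('/content/gdrive/')
shutil.copy('/content/gdrive/My Drive/Colab Notebooks/dataset/GTSRB_Final_Training_Images.zip', '/content/')
```

Remove the extraction directory if it is left over from a previous run, so the archive is unpacked into a clean directory:

```python
import os
import shutil

extract_dir = '/content/GTSRB_Final_Training_Images/'  # directory the archive is extracted into

if os.path.exists(extract_dir):
    shutil.rmtree(extract_dir)
    print(extract_dir, 'is removed.')
```

Unzip the archive: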

```python
import zipfile

with zipfile.ZipFile('/content/GTSRB_Final_Training_Images.zip', 'r') as target_file:
    target_file.extractall('/content/GTSRB_Final_Training_Images/')
```

Next, check the data counts.

```python
import glob

# Check the number and names of the labels (classes)
label_name_list = os.listdir('/content/GTSRB_Final_Training_Images/GTSRB/Final_Training/Images/')

print('total label nums = ', len(label_name_list))
print('=================================================')
print(label_name_list)
```

```
total label nums = 43
=================================================
['00039', '00006', '00004', '00020', '00042', '00041', '00012', '00009', '00003', '00023', '00001', '00035', '00032', '00021', '00018', '00019', '00008', '00033', '00015', '00029', '00027', '00038', '00000', '00026', '00025', '00005', '00014', '00010', '00036', '00016', '00017', '00011', '00040', '00007', '00024', '00022', '00030', '00031', '00034', '00028', '00037', '00002', '00013']
```

Load every image and its label. The name of each class directory is used as the label:

```python
import cv2
import numpy as np
from datetime import datetime

image_list = []
label_list = []

image_base_dir = '/content/GTSRB_Final_Training_Images/GTSRB/Final_Training/Images/'
image_label_list = os.listdir(image_base_dir)  # label (class directory) names

print('label nums => ', len(image_label_list))

start_time = datetime.now()

for label_name in image_label_list:
    # Collect the image files of this class
    file_path = image_base_dir + label_name
    img_file_list = glob.glob(file_path + '/*.ppm')

    # Read each .ppm file in the class directory and store it in the list
    for img_file in img_file_list:
        src_img = cv2.imread(img_file, cv2.IMREAD_COLOR)
        src_img = cv2.resize(src_img, dsize=(32, 32))
        src_img = cv2.cvtColor(src_img, cv2.COLOR_BGR2RGB)  # OpenCV loads BGR; convert to RGB

        image_list.append(src_img)
        label_list.append(float(label_name))  # convert the label string to a float

# Convert to numpy arrays
x_train = np.array(image_list).astype('float32')
y_train = np.array(label_list).astype('float32')

print('x_train.shape = ', x_train.shape, ', y_train.shape = ', y_train.shape)

end_time = datetime.now()
print('train data generation time => ', end_time - start_time)
```

```
label nums => 43
x_train.shape = (39209, 32, 32, 3) , y_train.shape = (39209,)
train data generation time => 0:00:03.314970
```

Shuffle the data, using one shuffled index array for both x_train and y_train so each image keeps its label:

```python
s = np.arange(len(x_train))
print('x_train len = ', s)

# Shuffle the indices randomly
np.random.shuffle(s)

# Rebuild x_train and y_train with the shuffled indices
x_train = x_train[s]
y_train = y_train[s]
```

```
x_train len = [ 0 1 2 ... 39206 39207 39208]
```

Hold out 20% of the data as the test set:

```python
ratio = 0.2
split_num = int(ratio * len(x_train))
print('split num => ', split_num)

x_test = x_train[0:split_num]
y_test = y_train[0:split_num]

x_train = x_train[split_num:]
y_train = y_train[split_num:]
```

```
split num => 7841
```

```python
print('x_train.shape = ', x_train.shape, ', y_train.shape = ', y_train.shape)
print('x_test.shape = ', x_test.shape, ', y_test.shape = ', y_test.shape)
```

```
x_train.shape = (31368, 32, 32, 3) , y_train.shape = (31368,)
x_test.shape = (7841, 32, 32, 3) , y_test.shape = (7841,)
```

```python
print(y_train[:3])
print(y_test[:3])
```

```
[38. 22. 39.]
[26. 4. 11.]
```
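The shuffle and split above can be folded into one reusable helper. The sketch below is an illustration, not the author's code: the name `shuffle_and_split` is hypothetical, and it assumes NumPy's `default_rng` in place of `np.random.shuffle` so that a `seed` makes the split reproducible. Shuffling matters here because the loading loop appends images one class directory at a time; slicing off the first 20% without shuffling would fill the test set with a handful of complete classes that never appear in training.

```python
import numpy as np

def shuffle_and_split(x, y, ratio=0.2, seed=None):
    """Hypothetical helper: shuffle x and y with one shared permutation,
    then hold out the first `ratio` fraction as the test set."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))   # one permutation for both arrays
    x, y = x[idx], y[idx]           # image/label pairs stay aligned
    split_num = int(ratio * len(x))
    return x[split_num:], y[split_num:], x[:split_num], y[:split_num]

# Toy data laid out class by class, like the loading loop produces:
# rows 0-4 belong to class 0, rows 5-9 to class 1
x = np.arange(10).reshape(10, 1).astype("float32")
y = np.repeat([0.0, 1.0], 5)

x_tr, y_tr, x_te, y_te = shuffle_and_split(x, y, ratio=0.2, seed=0)
print(x_tr.shape, x_te.shape)  # (8, 1) (2, 1)
```

Because the same index array reorders both arrays, every row still carries its original label after the split.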

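```python
# Normalize pixel values to the [0, 1] range
x_train = x_train.astype(np.float32) / 255.0
x_test = x_test.astype(np.float32) / 255.0

# The labels stay as class indices (stored as floats), which is what
# loss='sparse_categorical_crossentropy' in the compile step expects.
# To use one-hot labels instead, run the lines below (n_classes is defined
# in the model cell) and switch to loss='categorical_crossentropy':
#y_train = tf.keras.utils.to_categorical(y_train, num_classes=n_classes)
#y_test = tf.keras.utils.to_categorical(y_test, num_classes=n_classes)
```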
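Why the one-hot lines can stay commented out: sparse categorical cross-entropy reads the probability of the true class directly from the class index, while categorical cross-entropy needs a one-hot row, and both give the same per-sample loss. The NumPy sketch below checks this on toy values (`n_classes = 4` and the `probs` rows are made up for illustration); building one-hot rows with `np.eye` is an assumption standing in for `tf.keras.utils.to_categorical`, which produces the same layout.

```python
import numpy as np

n_classes = 4                                   # toy value; GTSRB has 43
y = np.array([2.0, 0.0, 3.0], dtype="float32")  # float class indices, as in y_train

# One-hot encoding: row i has a single 1 at column y[i]
y_onehot = np.eye(n_classes, dtype="float32")[y.astype(int)]

# Toy softmax outputs for the three samples (each row sums to 1)
probs = np.array([[0.1, 0.2, 0.6, 0.1],
                  [0.7, 0.1, 0.1, 0.1],
                  [0.25, 0.25, 0.25, 0.25]], dtype="float32")

# Categorical cross-entropy on one-hot targets
cce = -np.sum(y_onehot * np.log(probs), axis=1)
# Sparse categorical cross-entropy: log-probability of the true class only
scce = -np.log(probs[np.arange(len(y)), y.astype(int)])

print(np.allclose(cce, scce))  # True
```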

```python
import matplotlib.pyplot as plt

random_index = np.random.randint(0, len(x_train), 16)  # pick 16 random training images

plt.figure(figsize=(8, 8))

for pos in range(len(random_index)):
    plt.subplot(4, 4, pos + 1)
    plt.axis('off')
    train_img_index = random_index[pos]
    plt.imshow(x_train[train_img_index])

plt.tight_layout()
plt.show()
```

```python
n_classes = len(os.listdir('/content/GTSRB_Final_Training_Images/GTSRB/Final_Training/Images/'))  # number of labels

cnn = Sequential()

cnn.add(Conv2D(input_shape=(32, 32, 3), kernel_size=(3, 3), filters=32, activation='relu'))
cnn.add(Conv2D(kernel_size=(3, 3), filters=64, activation='relu'))
cnn.add(MaxPooling2D(pool_size=(2, 2)))
cnn.add(Dropout(0.25))

cnn.add(Flatten())

cnn.add(Dense(512, activation='relu'))
cnn.add(Dropout(0.5))
cnn.add(Dense(n_classes, activation='softmax'))

cnn.summary()
```

```
Model: "sequential"
_________________________________________________________________
 Layer (type)                  Output Shape              Param #
=================================================================
 conv2d (Conv2D)               (None, 30, 30, 32)        896
 conv2d_1 (Conv2D)             (None, 28, 28, 64)        18496
 max_pooling2d (MaxPooling2D)  (None, 14, 14, 64)        0
 dropout (Dropout)             (None, 14, 14, 64)        0
 flatten (Flatten)             (None, 12544)             0
 dense (Dense)                 (None, 512)               6423040
 dropout_1 (Dropout)           (None, 512)               0
 dense_1 (Dense)               (None, 43)                22059
=================================================================
Total params: 6,464,491
Trainable params: 6,464,491
Non-trainable params: 0
_________________________________________________________________
```
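The Param # column can be reproduced by hand. A Conv2D layer has one kernel_h x kernel_w x in_channels weight tensor plus one bias per filter, and a Dense layer has a full weight matrix plus one bias per output unit. The helper names below are illustrative; the shapes come from the summary (3x3 convolutions with Keras' default 'valid' padding shrink 32 to 30 to 28, and 2x2 pooling halves that to 14).

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # one kernel per filter over all input channels, plus one bias per filter
    return (kernel_h * kernel_w * in_channels + 1) * filters

def dense_params(in_units, out_units):
    # full weight matrix plus one bias per output unit
    return (in_units + 1) * out_units

conv1 = conv2d_params(3, 3, 3, 32)   # RGB input, 32 filters
conv2 = conv2d_params(3, 3, 32, 64)  # 32 input channels, 64 filters
flat = 14 * 14 * 64                  # Flatten output after 2x2 pooling
fc1 = dense_params(flat, 512)
fc2 = dense_params(512, 43)          # 43 traffic sign classes

total = conv1 + conv2 + fc1 + fc2
print(f"{total:,}")  # 6,464,491
```

The arithmetic also shows where the weights live: the Dense(512) layer alone holds 6,423,040 of the 6,464,491 parameters, over 99% of the model.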

```python
from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

cnn.compile(loss='sparse_categorical_crossentropy',
            optimizer=tf.keras.optimizers.Adam(), metrics=['accuracy'])

save_file_name = './GTSRB_Native_Colab.h5'

checkpoint = ModelCheckpoint(save_file_name,       # file name to save to
                             monitor='val_loss',   # save when val_loss improves
                             verbose=1,            # print a log message
                             save_best_only=True,  # keep only the best model
                             mode='auto'           # 'auto' infers 'min' for val_loss
                             )

earlystopping = EarlyStopping(monitor='val_loss',  # criterion to monitor (val_loss)
                              patience=5,          # stop if it does not improve for 5 epochs in a row
                              )
```
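How the two callbacks interact can be sketched in plain Python. This is a simplified model, not Keras' implementation: it assumes `min_delta=0` and 'min' mode (what 'auto' resolves to for `val_loss`), and the function `simulate_early_stopping` is hypothetical. It reports the epoch whose weights `ModelCheckpoint(save_best_only=True)` would keep and the epoch at which `EarlyStopping(patience=5)` would end training. Since `EarlyStopping` does not restore the best weights by default, `cnn` ends up with the weights of the stopping epoch, which is why the best model is reloaded from the checkpoint file afterwards.

```python
def simulate_early_stopping(val_losses, patience=5):
    """Return (best_epoch, stop_epoch) for per-epoch val_loss values.

    Epochs are 0-based; stop_epoch is None if training would run through
    every listed epoch. Simplified: min_delta=0, lower loss is better.
    """
    best = float("inf")
    best_epoch = None
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            # improvement: the checkpoint callback would save here
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, None

losses = [1.00, 0.80, 0.70, 0.75, 0.72, 0.71, 0.90, 0.80, 0.60]
print(simulate_early_stopping(losses, patience=5))  # (2, 7)
```

Note that training stops at epoch 7, so the lower loss listed for epoch 8 is never reached: early stopping trades the chance of a late improvement for shorter training.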

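```python
start_time = datetime.now()

hist = cnn.fit(x_train, y_train,
               batch_size=32, epochs=30,
               validation_data=(x_test, y_test),
               callbacks=[checkpoint, earlystopping])

end_time = datetime.now()
print('elapsed time => ', end_time - start_time)
```

Evaluate the model as it stands after the last training epoch (EarlyStopping does not restore the best weights by default):

```python
cnn.evaluate(x_test, y_test)
```

Load the best model saved by the checkpoint (lowest val_loss) and evaluate it:

```python
best_model = tf.keras.models.load_model('./GTSRB_Native_Colab.h5')
best_model.evaluate(x_test, y_test)
```

```python
y_pred = cnn.predict(x_test)
```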
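`y_pred` holds one softmax row of 43 class probabilities per test image. A minimal NumPy sketch of turning those rows into class predictions with `argmax` and scoring them; the arrays here are toy stand-ins for `y_pred` and `y_test`, not results from the post. Run on the real arrays, the same two lines should agree with the accuracy `cnn.evaluate` reports.

```python
import numpy as np

# Toy stand-ins: softmax rows (y_pred) and float class labels (y_test)
y_pred = np.array([[0.7, 0.2, 0.1],
                   [0.1, 0.3, 0.6],
                   [0.2, 0.5, 0.3],
                   [0.4, 0.4, 0.2]], dtype="float32")
y_test = np.array([0.0, 2.0, 0.0, 0.0], dtype="float32")

# Most probable class per row (ties resolve to the lowest index)
pred_labels = np.argmax(y_pred, axis=1)
# Fraction of predictions that match the labels
accuracy = np.mean(pred_labels == y_test)

print(pred_labels, accuracy)  # [0 2 1 0] 0.75
```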

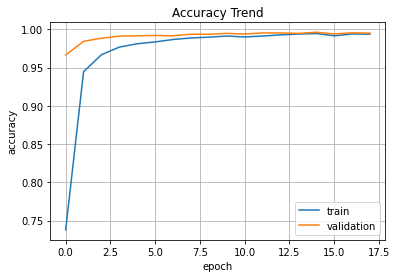

```python
print(y_pred.shape)
```

```
(7841, 43)
```

```python
plt.plot(hist.history['accuracy'], label='train')
plt.plot(hist.history['val_accuracy'], label='validation')
plt.title('Accuracy Trend')
plt.ylabel('accuracy')
plt.xlabel('epoch')
plt.legend(loc='best')
plt.grid()
plt.show()
```

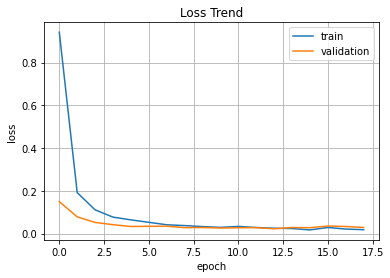

```python
plt.plot(hist.history['loss'], label='train')
plt.plot(hist.history['val_loss'], label='validation')
plt.title('Loss Trend')
plt.ylabel('loss')
plt.xlabel('epoch')
plt.legend(loc='best')
plt.grid()
plt.show()
```
