CNN Basic Architecture

import tensorflow as tf

from tensorflow.keras.layers import Flatten, Dense, Conv2D, MaxPool2D

from tensorflow.keras.models import Sequential

from tensorflow.keras.optimizers import Adam

from tensorflow.keras.datasets import mnist

from tensorflow.keras.utils import to_categorical

import numpy as np

from datetime import datetime

import matplotlib.pyplot as plt

print(tf.__version__)

2.8.2

(x_train, t_train), (x_test, t_test) = mnist.load_data()

x_train = x_train / 255.0

x_test = x_test / 255.0

print('x_train.shape = ', x_train.shape, ' , x_test.shape = ', x_test.shape)

print('t_train.shape = ', t_train.shape, ' , t_test.shape = ', t_test.shape)Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11493376/11490434 [==============================] - 0s 0us/step

11501568/11490434 [==============================] - 0s 0us/step

x_train.shape = (60000, 28, 28) , x_test.shape = (10000, 28, 28)

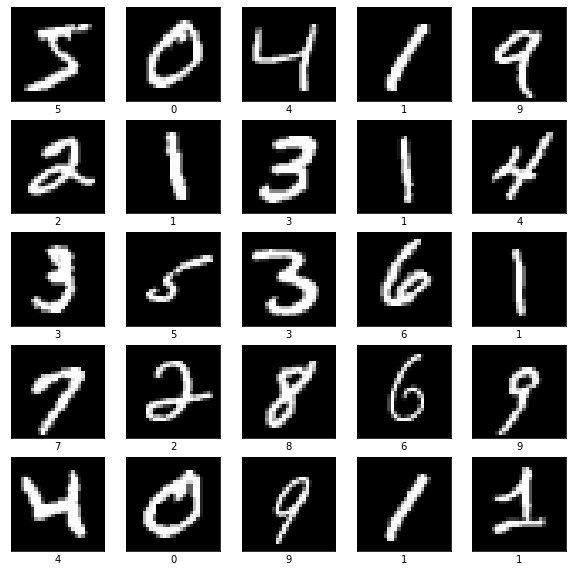

t_train.shape = (60000,) , t_test.shape = (10000,)# 데이터 출력

plt.figure(figsize=(10,10))

for index in range(25):

    plt.subplot(5, 5, index+1)

    plt.xticks([])

    plt.yticks([])

    plt.grid(False)

    plt.imshow(x_train[index], cmap='gray')

    plt.xlabel(str(t_train[index]))

plt.show()

one-hot encoding

# one hot encoding

t_train = to_categorical(t_train, 10)

t_test = to_categorical(t_test, 10)
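To make the one-hot step concrete, here is a minimal NumPy sketch of what the encoding produces. The helper name `one_hot` and the sample labels are made up for illustration; this is not how `to_categorical` is implemented internally, only an equivalent result for integer labels in the range 0 to 9.

```python
import numpy as np

def one_hot(labels, num_classes):
    """Return a (len(labels), num_classes) float32 array with a single 1.0 per row."""
    labels = np.asarray(labels, dtype=int)
    # Row i of the identity matrix is the one-hot vector for class i.
    return np.eye(num_classes, dtype=np.float32)[labels]

labels = np.array([5, 0, 4])            # hypothetical integer labels
encoded = one_hot(labels, 10)

print(encoded.shape)                    # (3, 10)
print(encoded[0])                       # 1.0 at index 5, 0.0 elsewhere
print(np.argmax(encoded, axis=-1))      # argmax recovers the integer labels
```

Each label becomes a length-10 vector, which is why the label arrays change from shape `(60000,)` to `(60000, 10)` after this step.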

model = Sequential()

model.add(Conv2D(input_shape=(28,28,1),

                 kernel_size=3, filters=32,

                 strides=(1,1), activation='relu', padding='SAME'))

model.add(MaxPool2D(pool_size=(2,2), padding='SAME'))

model.add(Flatten())

model.add(Dense(10, activation='softmax'))

# Compile the model

# The labels are one-hot encoded, so use loss='categorical_crossentropy'

model.compile(optimizer=Adam(learning_rate=0.001),

loss='categorical_crossentropy', metrics=['accuracy'])

model.summary()

Model: "sequential_1"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_1 (Conv2D)            (None, 28, 28, 32)        320
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 14, 14, 32)        0
_________________________________________________________________
flatten_1 (Flatten)          (None, 6272)              0
_________________________________________________________________
dense_1 (Dense)              (None, 10)                62730
=================================================================
Total params: 63,050
Trainable params: 63,050
Non-trainable params: 0
_________________________________________________________________
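The shapes and parameter counts in the summary can be checked by hand. The sketch below redoes the arithmetic in plain Python; the helper functions are hypothetical names introduced only for this check, and they assume `padding='SAME'` and a pooling stride equal to the pool size (the Keras default when `strides` is not given).

```python
import math

def conv2d_same(h, w, in_ch, kernel, filters, stride=1, use_bias=True):
    """Output shape and parameter count of a 2D convolution with SAME padding."""
    # Each filter has kernel*kernel*in_ch weights plus one optional bias.
    params = (kernel * kernel * in_ch + (1 if use_bias else 0)) * filters
    shape = (math.ceil(h / stride), math.ceil(w / stride), filters)
    return shape, params

def maxpool_same(h, w, ch, pool):
    """Output shape of max pooling with SAME padding and stride == pool size."""
    return (math.ceil(h / pool), math.ceil(w / pool), ch)

def dense_params(in_features, units):
    """Weights plus biases of a fully connected layer."""
    return in_features * units + units

conv_shape, conv_params = conv2d_same(28, 28, 1, kernel=3, filters=32)
pool_shape = maxpool_same(*conv_shape, pool=2)
flat = pool_shape[0] * pool_shape[1] * pool_shape[2]
fc_params = dense_params(flat, 10)
total = conv_params + fc_params

print(conv_shape, conv_params)   # (28, 28, 32) 320
print(pool_shape)                # (14, 14, 32)
print(flat, fc_params)           # 6272 62730
print(total)                     # 63050
```

The pooling and flatten layers have no trainable weights, so the total is just the convolution plus the dense layer.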

sparse
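With integer labels, sparse categorical cross-entropy yields the same per-sample loss as categorical cross-entropy on the one-hot version of those labels. The NumPy sketch below illustrates this with made-up probabilities; it is a simplified version of the formulas (no clipping or averaging, which Keras applies on top), and the function names only mirror the Keras loss names for readability.

```python
import numpy as np

def categorical_crossentropy(one_hot_targets, probs):
    # -sum_k t_k * log(p_k); only the true class contributes.
    return -np.sum(one_hot_targets * np.log(probs), axis=-1)

def sparse_categorical_crossentropy(int_targets, probs):
    # Pick the probability of the true class directly by index.
    rows = np.arange(len(int_targets))
    return -np.log(probs[rows, int_targets])

# Hypothetical softmax outputs for two samples and three classes.
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.1, 0.8]])
int_targets = np.array([0, 2])
one_hot_targets = np.eye(3)[int_targets]

loss_onehot = categorical_crossentropy(one_hot_targets, probs)
loss_sparse = sparse_categorical_crossentropy(int_targets, probs)

print(loss_onehot)
print(loss_sparse)
print(np.allclose(loss_onehot, loss_sparse))   # True
```

So the choice between the two losses depends only on the label format, not on what is being optimized.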

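# Compile the model

# The labels are not one-hot encoded here, so use loss='sparse_categorical_crossentropy'

# (this variant expects the original integer labels, i.e. skip the to_categorical step above)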

model = Sequential()

model.add(Conv2D(input_shape = (28, 28, 1),

                 kernel_size = 3, filters = 32,

                 strides = (1, 1), activation = 'relu', use_bias = True, padding = 'SAME'))

model.add(MaxPool2D(pool_size = (2, 2), padding = 'SAME'))

model.add(Flatten())

model.add(Dense(10, activation = 'softmax'))

model.compile(optimizer = Adam(learning_rate = 0.001), loss = 'sparse_categorical_crossentropy', metrics = ['accuracy'])

model.summary()

Model: "sequential_7"
_________________________________________________________________
Layer (type)                    Output Shape            Param #
=================================================================
conv2d_4 (Conv2D)               (None, 28, 28, 32)      320
max_pooling2d_3 (MaxPooling2D)  (None, 14, 14, 32)      0
flatten_2 (Flatten)             (None, 6272)            0
dense_2 (Dense)                 (None, 10)              62730
=================================================================
Total params: 63,050
Trainable params: 63,050
Non-trainable params: 0
_________________________________________________________________

start_time = datetime.now()

hist = model.fit(x_train.reshape(-1,28,28,1), t_train,

batch_size=50, epochs=50, validation_split=0.2)

end_time = datetime.now()

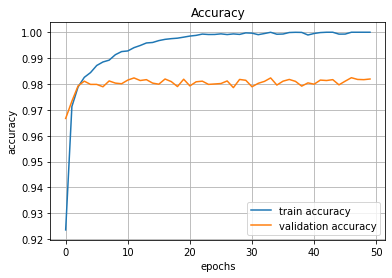

print('\n\nElapsed Time => ', end_time - start_time)

model.evaluate(x_test.reshape(-1, 28, 28, 1), t_test)

313/313 [==============================] - 3s 8ms/step - loss: 0.1100 - accuracy: 0.9825

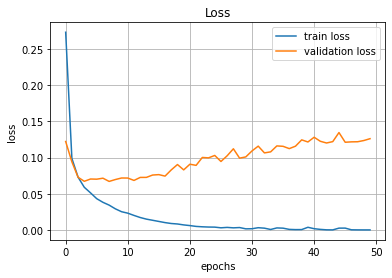

[0.10999356955289841, 0.9825000166893005]

plt.title('Loss')

plt.grid()

plt.xlabel('epochs')

plt.ylabel('loss')

plt.plot(hist.history['loss'], label='train loss')

plt.plot(hist.history['val_loss'], label='validation loss')

plt.legend(loc='best')

plt.show()

plt.title('Accuracy')

plt.grid()

plt.xlabel('epochs')

plt.ylabel('accuracy')

plt.plot(hist.history['accuracy'], label='train accuracy')

plt.plot(hist.history['val_accuracy'], label='validation accuracy')

plt.legend(loc='best')

plt.show()
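Besides plotting the curves, it can help to read off the epoch where the validation loss is lowest, since a validation loss that rises while the training loss keeps falling usually points to overfitting. The sketch below uses a made-up dictionary shaped like `hist.history`; the numbers are illustrative only and are not results from this training run, and `best_epoch` is a hypothetical helper.

```python
def best_epoch(val_losses):
    """Return (1-based epoch, loss) for the lowest validation loss."""
    idx = min(range(len(val_losses)), key=val_losses.__getitem__)
    return idx + 1, val_losses[idx]

# Illustrative values only, laid out like hist.history.
history = {
    'loss':     [0.30, 0.12, 0.07, 0.04, 0.02],
    'val_loss': [0.15, 0.09, 0.08, 0.10, 0.13],
}

epoch, loss = best_epoch(history['val_loss'])
print(epoch, loss)   # 3 0.08

# Gap between validation and training loss at the last epoch.
gap = history['val_loss'][-1] - history['loss'][-1]
print(round(gap, 2))  # 0.11
```

With the real run, the same call would be `best_epoch(hist.history['val_loss'])`.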

Comparing the test labels with the predicted values

rand_idx = np.random.randint(len(x_test))

print(rand_idx)

predicted_val = model.predict(x_test[rand_idx].reshape(-1,28,28,1))

print(type(predicted_val), predicted_val.shape)

print(predicted_val)

print(np.argmax(predicted_val), t_test[rand_idx])

3168

<class 'numpy.ndarray'> (1, 10)

[[2.9477740e-31 1.0732472e-25 1.2657751e-20 3.5341849e-13 1.3210143e-16

  1.9856330e-19 1.2783073e-30 2.5408397e-16 5.2557976e-16 1.0000000e+00]]

9 9

# Reshape x_test into a 4D tensor

x_test = x_test.reshape(-1, 28, 28, 1)

print(x_test.shape)

(10000, 28, 28, 1)

# Draw random indices without duplicates

rand_idx = np.random.choice(len(x_test), 3, replace=False)

print(rand_idx)

# Use axis=-1 to take the argmax along each row of the predictions

predicted_val = model.predict(x_test[rand_idx])

print(type(predicted_val), predicted_val.shape)

print(np.argmax(predicted_val, axis=-1), t_test[rand_idx])

# Build the same 4D tensor with np.array()

test_data_list = [ x_test[idx] for idx in rand_idx ]

print(len(test_data_list))

test_data_array = np.array(test_data_list)

print(test_data_array.shape)

3

(3, 28, 28, 1)

predicted_val = model.predict(test_data_array)

print(type(predicted_val), predicted_val.shape)

print(np.argmax(predicted_val, axis=-1), t_test[rand_idx])
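The `axis=-1` argument matters once more than one sample is predicted at a time. The short NumPy sketch below uses a made-up batch of three probability rows (three classes instead of ten, to keep it readable) to show the difference between a per-row argmax and the default flattened argmax; the labels are hypothetical as well.

```python
import numpy as np

# Hypothetical softmax outputs: 3 samples, 3 classes.
probs = np.array([[0.05, 0.60, 0.35],
                  [0.90, 0.05, 0.05],
                  [0.20, 0.10, 0.70]])
labels = np.array([1, 0, 1])             # made-up ground-truth labels

per_sample = np.argmax(probs, axis=-1)   # one class index per row
flat_index = np.argmax(probs)            # index into the flattened array

print(per_sample)    # [1 0 2]
print(flat_index)    # 3, i.e. row 1, column 0 of the batch, not a class index

# Fraction of samples whose predicted class matches the label.
accuracy = np.mean(per_sample == labels)
print(accuracy)
```

For a single sample of shape `(1, 10)` both calls return the same number, which is why the first prediction in this post works without `axis`.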
