Bootstrapping for Classification

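Bootstrapping for classification uses the StratifiedShuffleSplit class to generate the splits. Like StratifiedKFold in cross-validation, this class keeps the classes balanced, so the sampling does not change the class proportions in each split.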
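To make the stratification concrete, here is a minimal sketch on toy data. The arrays `features` and `labels` and the 80/20 class ratio are assumptions made up for illustration, not data from this post.

```python
import numpy as np
from sklearn.model_selection import StratifiedShuffleSplit

# Toy data (an assumption for illustration): 100 samples, 80% class 0, 20% class 1.
labels = np.array([0] * 80 + [1] * 20)
features = np.arange(100).reshape(-1, 1)

splitter = StratifiedShuffleSplit(n_splits=5, test_size=0.1, random_state=42)

test_class_counts = []
for train_idx, test_idx in splitter.split(features, labels):
    # Count how many test samples fall into each class for this split.
    counts = np.bincount(labels[test_idx], minlength=2)
    test_class_counts.append(counts.tolist())
    print(counts)
```

Each 10-sample test set should contain 8 samples of class 0 and 2 of class 1, matching the 80/20 ratio of the full data. Unlike StratifiedKFold, the test sets of different splits are drawn independently and may overlap.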

import time
import statistics

import numpy as np
from sklearn import metrics
from sklearn.model_selection import StratifiedShuffleSplit
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.callbacks import EarlyStopping

# x (feature matrix) and y (one-hot encoded class labels) are assumed to be
# NumPy arrays prepared earlier.

# hms_string is not defined in this post; a minimal version is assumed here.
def hms_string(sec_elapsed):
    h = int(sec_elapsed / (60 * 60))
    m = int((sec_elapsed % (60 * 60)) / 60)
    s = sec_elapsed % 60
    return f"{h}:{m:02d}:{s:05.2f}"

EPOCHS = 500  # upper bound on training epochs per split
SPLITS = 50

# Bootstrap
boot = StratifiedShuffleSplit(n_splits=SPLITS, test_size=0.1, random_state=42)

# Stratify on class indices recovered from the one-hot labels
y_classes = np.argmax(y, axis=1)

# Track progress
mean_benchmark = []
epochs_needed = []
num = 0

for train, test in boot.split(x, y_classes):
    start_time = time.time()
    num += 1

    # Split train and test
    x_train = x[train]
    y_train = y[train]
    x_test = x[test]
    y_test = y[test]

    # Construct the neural network
    model = Sequential()
    model.add(Dense(50, input_dim=x.shape[1], activation='relu'))
    model.add(Dense(25, activation='relu'))
    model.add(Dense(y.shape[1], activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam')

    monitor = EarlyStopping(monitor='val_loss', min_delta=1e-3,
                            patience=25, verbose=0, mode='auto',
                            restore_best_weights=True)

    # Train on the bootstrap sample
    model.fit(x_train, y_train, validation_data=(x_test, y_test),
              callbacks=[monitor], verbose=0, epochs=EPOCHS)
    epochs = monitor.stopped_epoch  # stays 0 if early stopping never triggered
    epochs_needed.append(epochs)

    # Score the out-of-sample predictions with log loss
    pred = model.predict(x_test)
    y_compare = np.argmax(y_test, axis=1)
    score = metrics.log_loss(y_compare, pred, labels=np.arange(y.shape[1]))
    mean_benchmark.append(score)

    m1 = statistics.mean(mean_benchmark)
    m2 = statistics.mean(epochs_needed)
    mdev = statistics.pstdev(mean_benchmark)

    # Record this iteration
    time_took = time.time() - start_time
    print(f"#{num}: score={score:.6f}, mean score={m1:.6f},",
          f"stdev={mdev:.6f},",
          f"epochs={epochs}, mean epochs={int(m2)},",
          f"time={hms_string(time_took)}")

Benchmarking

Now that we have seen how to bootstrap for both classification and regression, we can try hyperparameter optimization on the data. The evaluation metric is log loss.
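As a reminder of what log loss measures, the sketch below computes it by hand: the mean negative log of the probability the model assigned to the true class. The function name, the clipping constant `eps`, and the example probabilities are illustrative assumptions. In the benchmark itself this quantity would normally come from scikit-learn's `metrics.log_loss`.

```python
import math

def log_loss(true_classes, predicted_probs, eps=1e-15):
    """Mean negative log-probability assigned to the true class."""
    total = 0.0
    for true_class, probs in zip(true_classes, predicted_probs):
        # Clip to avoid log(0) when a model outputs an exact 0 probability.
        p = min(max(probs[true_class], eps), 1 - eps)
        total -= math.log(p)
    return total / len(true_classes)

# Made-up predictions for three samples over three classes.
true_classes = [0, 2, 1]
confident = [[0.9, 0.05, 0.05], [0.05, 0.05, 0.9], [0.05, 0.9, 0.05]]
uniform = [[1 / 3, 1 / 3, 1 / 3]] * 3

print(log_loss(true_classes, confident))  # equals -ln(0.9)
print(log_loss(true_classes, uniform))    # equals ln(3)
```

Lower is better: confident, correct predictions approach 0, while a model that guesses uniformly over k classes scores ln(k).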

import time
import statistics

import numpy as np
from sklearn import metrics
from sklearn.model_selection import StratifiedShuffleSplit
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Dropout, PReLU
from tensorflow.keras import regularizers
from tensorflow.keras.callbacks import EarlyStopping

# x (feature matrix) and y (one-hot encoded class labels) are assumed to be
# NumPy arrays prepared earlier.

# hms_string is not defined in this post; a minimal version is assumed here.
def hms_string(sec_elapsed):
    h = int(sec_elapsed / (60 * 60))
    m = int((sec_elapsed % (60 * 60)) / 60)
    s = sec_elapsed % 60
    return f"{h}:{m:02d}:{s:05.2f}"

SPLITS = 100

# Bootstrap
boot = StratifiedShuffleSplit(n_splits=SPLITS, test_size=0.1)

# Stratify on class indices recovered from the one-hot labels
y_classes = np.argmax(y, axis=1)

# Track progress
mean_benchmark = []
epochs_needed = []
num = 0

for train, test in boot.split(x, y_classes):
    start_time = time.time()
    num += 1

    # Split train and test
    x_train = x[train]
    y_train = y[train]
    x_test = x[test]
    y_test = y[test]

    # Construct the neural network: three hidden layers with L2 regularization
    model = Sequential()
    model.add(Dense(100, input_dim=x.shape[1], activation=PReLU(),
                    kernel_regularizer=regularizers.l2(1e-4)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation=PReLU(),
                    kernel_regularizer=regularizers.l2(1e-4)))
    model.add(Dropout(0.5))
    model.add(Dense(100, activation=PReLU(),
                    kernel_regularizer=regularizers.l2(1e-4)))
    # model.add(Dropout(0.5))  # left disabled: usually better performance
    model.add(Dense(y.shape[1], activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam')

    monitor = EarlyStopping(monitor='val_loss', min_delta=1e-3,
                            patience=100, verbose=0, mode='auto',
                            restore_best_weights=True)

    # Train on the bootstrap sample
    model.fit(x_train, y_train, validation_data=(x_test, y_test),
              callbacks=[monitor], verbose=0, epochs=1000)
    epochs = monitor.stopped_epoch  # stays 0 if early stopping never triggered
    epochs_needed.append(epochs)

    # Score the out-of-sample predictions with log loss
    pred = model.predict(x_test)
    y_compare = np.argmax(y_test, axis=1)
    score = metrics.log_loss(y_compare, pred, labels=np.arange(y.shape[1]))
    mean_benchmark.append(score)

    m1 = statistics.mean(mean_benchmark)
    m2 = statistics.mean(epochs_needed)
    mdev = statistics.pstdev(mean_benchmark)

    # Record this iteration
    time_took = time.time() - start_time
    print(f"#{num}: score={score:.6f}, mean score={m1:.6f},",
          f"stdev={mdev:.6f},",
          f"epochs={epochs}, mean epochs={int(m2)},",
          f"time={hms_string(time_took)}")

반응형

LIST

'DNN with Keras > Regularization and Dropout' 카테고리의 다른 글

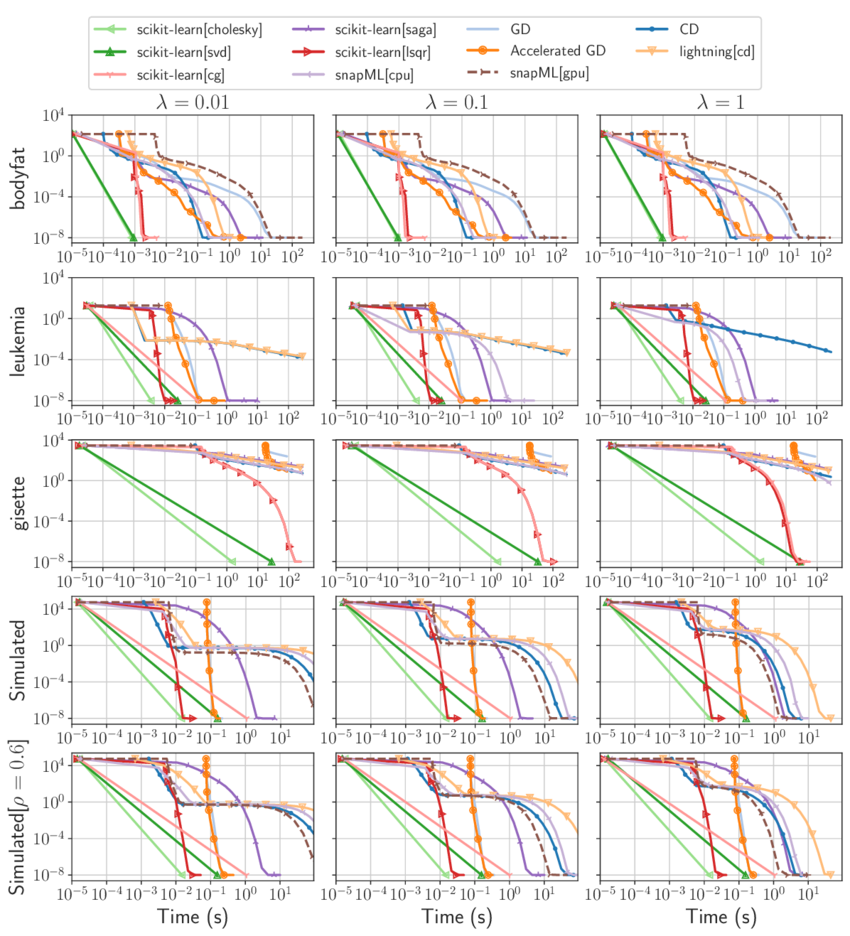

| 정규화 기술 벤치마킹 (1) (회귀) (0) | 2023.07.24 |

|---|---|

| 과적합을 줄이기 위한 드롭아웃 (0) | 2023.07.24 |

| 과적합을 줄이기 위한 L1 및 L2 정규화 (0) | 2023.07.24 |

| 홀드아웃 (Holdout) 방법 (0) | 2023.07.24 |

| K-Fold Cross-validation (0) | 2023.07.24 |