728x90

반응형

SMALL

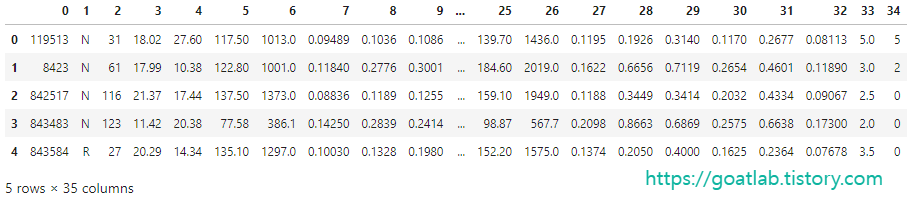

wpbc 데이터셋

특징은 유방 종괴의 미세 바늘 흡인물 (FNA)의 디지털화된 이미지에서 계산된다. 이것은 이미지에 존재하는 세포 핵의 특성을 설명한다. (https://archive.ics.uci.edu/ml/datasets/Breast+Cancer+Wisconsin+%28Diagnostic%29)

import pandas as pd

df = pd.read_csv('wpbc_data.csv', header=None)

df.head()

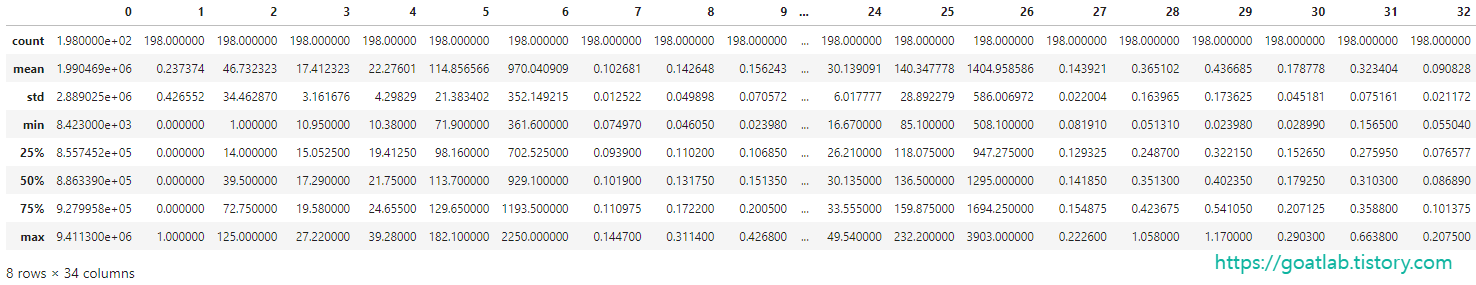

# Outcome 값 변경

df.loc[df[1]=='N',1] = 0

df.loc[df[1]=='R',1] = 1

df[1] = df[1].astype('int32')

df.describe()

# 결측치 제거 및 Outcome 비율 확인

for key in df.keys():

df.loc[df[key]=='?',key] = None

df.dropna(inplace=True)

df.reset_index(inplace=True,drop=True)

df[1].value_counts()0.0 148

1.0 46

Name: 1, dtype: int64

데이터 전처리

# 훈련 데이터, 검증 데이터, 테스트 데이터로 나누기

from sklearn.model_selection import train_test_split

train_features, test_features, train_target, test_target = train_test_split(features, outcome, stratify=outcome, test_size=0.3)

train_features, val_features, train_target, val_target = train_test_split(train_features, train_target, stratify=train_target, test_size=0.3)

데이터 스케일링

from sklearn.preprocessing import MinMaxScaler

feature_scaler = MinMaxScaler()

train_features_scaled = feature_scaler.fit_transform(train_features)

val_features_scaled = feature_scaler.transform(val_features)

test_features_scaled = feature_scaler.transform(test_features)

데이터 불균형이 심한 데이터셋 훈련

from xgboost import XGBClassifier

from xgboost.callback import EarlyStopping

xgb = XGBClassifier()

xgb.fit(train_features_scaled, train_target)

result = xgb.predict(test_features_scaled)# 데이터 불균형이 심한 데이터셋 성능 평가

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

print('Accuracy :',accuracy_score(test_target, result))

print('Precision :',precision_score(test_target, result))

print('Recall :',recall_score(test_target, result))

print('F1 score :',f1_score(test_target, result))Accuracy : 0.7966101694915254

Precision : 0.6666666666666666

Recall : 0.2857142857142857

F1 score : 0.4# 데이터 불균형이 심한 데이터에서 중요한 학습 특징 확인

%matplotlib inline

from xgboost import plot_importance

import matplotlib.pyplot as plt

fig, ax = plt.subplots(figsize=(10, 12))

plot_importance(xgb,ax=ax)

오버샘플링

from imblearn.over_sampling import SMOTE

sm = SMOTE(random_state=42, n_jobs=-1)

train_features_resampled, train_target_resampled = sm.fit_resample(train_features_scaled, train_target)# 오버샘플링 수행 후 알고리즘 훈련

from xgboost import XGBClassifier

from xgboost.callback import EarlyStopping

xgb = XGBClassifier()

early_stop = EarlyStopping(rounds=20,metric_name='error',data_name="validation_0",save_best=True)

xgb.fit(train_features_resampled, train_target_resampled,eval_set=[(val_features_scaled, val_target)], eval_metric='error', callbacks=[early_stop])

result = xgb.predict(test_features_scaled)# 오버샘플링 수행 후 알고리즘 성능 평가

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

print('Accuracy :',accuracy_score(test_target, result))

print('Precision :',precision_score(test_target, result))

print('Recall :',recall_score(test_target, result))

print('F1 score :',f1_score(test_target, result))Accuracy : 0.6949152542372882

Precision : 0.3888888888888889

Recall : 0.5

F1 score : 0.43750000000000006728x90

반응형

LIST

'Learning-driven Methodology > ML (Machine Learning)' 카테고리의 다른 글

| [XGBoost] 심혈관 질환 예측 (0) | 2022.10.05 |

|---|---|

| [XGBoost] 심장 질환 예측 (0) | 2022.10.04 |

| [XGBoost] 위스콘신 유방암 데이터 (2) (0) | 2022.10.04 |

| [XGBoost] 위스콘신 유방암 데이터 (1) (0) | 2022.10.04 |

| [Machine Learning] 앙상블 (Ensemble) (0) | 2022.10.04 |