How to Integrate Machine Learning into an Android App

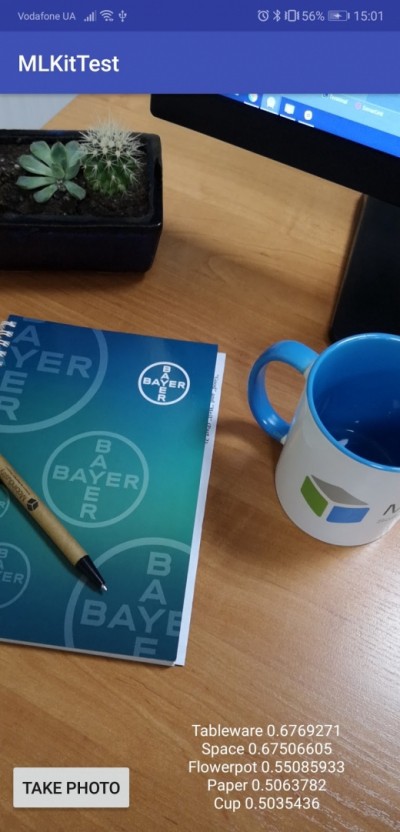

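Machine learning and object recognition are two of the hottest topics in mobile development today. Object recognition is a big part of machine learning and can be used in domains such as e-commerce, healthcare, media, and education. This article walks through the process of integrating machine learning into an Android app, using image labeling as the example.

The machine vision market is growing rapidly, and many of the world's largest technology companies are investing in new machine learning tools. These tools let developers integrate machine learning and machine vision into their mobile applications.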

Tutorial on image labeling

The first step is to connect to Firebase services. To do this, open the Firebase console and create a new project.

Then add the following dependencies to your Gradle file.

implementation 'com.google.firebase:firebase-ml-vision:18.0.1'

implementation 'com.google.firebase:firebase-ml-vision-image-label-model:17.0.2'

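If you use the on-device API, configure your app to automatically download the ML model to the device after the app is installed from the Play Store. To do so, add the following declaration inside the application element of your app's AndroidManifest.xml file.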

<meta-data

android:name="com.google.firebase.ml.vision.DEPENDENCIES"

android:value="label" />

Next, create the screen layout.

<?xml version="1.0" encoding="utf-8"?>

<android.support.constraint.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<LinearLayout

android:id="@+id/layout_preview"

android:layout_width="0dp"

android:layout_height="0dp"

android:orientation="horizontal"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent" />

<Button

android:id="@+id/btn_take_picture"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginStart="8dp"

android:layout_marginBottom="16dp"

android:text="Take Photo"

android:textSize="15sp"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintStart_toStartOf="parent" />

<TextView

android:id="@+id/txt_result"

android:layout_width="0dp"

android:layout_height="wrap_content"

android:layout_marginStart="16dp"

android:layout_marginEnd="16dp"

android:textAlignment="center"

android:textColor="@android:color/white"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toEndOf="@+id/btn_take_picture" />

</android.support.constraint.ConstraintLayout>

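A SurfaceView is used to display the camera preview. You also need to add the camera permission to AndroidManifest.xml.

<uses-permission android:name="android.permission.CAMERA" />

Create a class that extends SurfaceView and implements the SurfaceHolder.Callback interface.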

import java.io.IOException;

import android.content.Context;

import android.hardware.Camera;

import android.util.Log;

import android.view.SurfaceHolder;

import android.view.SurfaceView;

public class CameraPreview extends SurfaceView implements SurfaceHolder.Callback {

private SurfaceHolder mHolder;

private Camera mCamera;

public CameraPreview(Context context, Camera camera) {

super(context);

mCamera = camera;

mHolder = getHolder();

mHolder.addCallback(this);

// deprecated setting, but required on Android versions prior to 3.0

mHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

}

public void surfaceCreated(SurfaceHolder holder) {

try {

// create the surface and start camera preview

if (mCamera != null) {

mCamera.setPreviewDisplay(holder);

mCamera.startPreview();

}

} catch (IOException e) {

Log.d(VIEW_LOG_TAG, "Error setting camera preview: " + e.getMessage());

}

}

public void refreshCamera(Camera camera) {

if (mHolder.getSurface() == null) {

// preview surface does not exist

return;

}

// stop preview before making changes

try {

mCamera.stopPreview();

} catch (Exception e) {

// ignore: tried to stop a non-existent preview

}

// set preview size and make any resize, rotate or

// reformatting changes here

// start preview with new settings

setCamera(camera);

try {

mCamera.setPreviewDisplay(mHolder);

mCamera.startPreview();

} catch (Exception e) {

Log.d(VIEW_LOG_TAG, "Error starting camera preview: " + e.getMessage());

}

}

public void surfaceChanged(SurfaceHolder holder, int format, int w, int h) {

// If your preview can change or rotate, take care of those events here.

// Make sure to stop the preview before resizing or reformatting it.

refreshCamera(mCamera);

}

public void setCamera(Camera camera) {

//method to set a camera instance

mCamera = camera;

}

@Override

public void surfaceDestroyed(SurfaceHolder holder) {

}

}
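The comments in refreshCamera and surfaceChanged leave rotation handling to the reader. As a rough sketch, the arithmetic that the Android documentation describes for Camera.setDisplayOrientation can be written as a plain Java helper, which makes it easy to check without a device. The names PreviewRotation and displayOrientation are hypothetical and not part of the original tutorial; on a real device the inputs would come from Camera.CameraInfo.orientation and the display's current rotation.

```java
// Sketch only: PreviewRotation is a hypothetical helper, not part of the tutorial code.
final class PreviewRotation {

    private PreviewRotation() {}

    /**
     * Computes the clockwise angle to pass to Camera.setDisplayOrientation.
     *
     * @param sensorOrientation      value of Camera.CameraInfo.orientation (0, 90, 180 or 270)
     * @param displayRotationDegrees current display rotation in degrees (0, 90, 180 or 270)
     * @param frontFacing            true for a front camera, whose preview is mirrored
     */
    static int displayOrientation(int sensorOrientation,
                                  int displayRotationDegrees,
                                  boolean frontFacing) {
        if (frontFacing) {
            int mirrored = (sensorOrientation + displayRotationDegrees) % 360;
            return (360 - mirrored) % 360; // compensate for the mirrored preview
        }
        return (sensorOrientation - displayRotationDegrees + 360) % 360;
    }

    public static void main(String[] args) {
        // Back camera with a sensor mounted at 90 degrees, phone held in portrait
        System.out.println(displayOrientation(90, 0, false));
    }
}
```

For a back camera whose sensor is mounted at 90 degrees and a phone held in portrait, the helper returns 90, so a fixed value of 90 is only appropriate for that common case.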

The last thing to do is add the logic for the app's main screen.


import android.Manifest;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.hardware.Camera;

import android.support.annotation.NonNull;

import android.support.v7.app.AppCompatActivity;

import android.os.Bundle;

import android.view.WindowManager;

import android.widget.LinearLayout;

import android.widget.TextView;

import android.widget.Toast;

import com.google.android.gms.tasks.OnFailureListener;

import com.google.android.gms.tasks.OnSuccessListener;

import com.google.firebase.ml.vision.FirebaseVision;

import com.google.firebase.ml.vision.common.FirebaseVisionImage;

import com.google.firebase.ml.vision.label.FirebaseVisionLabel;

import com.google.firebase.ml.vision.label.FirebaseVisionLabelDetector;

import com.google.firebase.ml.vision.label.FirebaseVisionLabelDetectorOptions;

import com.gun0912.tedpermission.PermissionListener;

import com.gun0912.tedpermission.TedPermission;

import java.util.ArrayList;

import java.util.List;

import butterknife.BindView;

import butterknife.ButterKnife;

import butterknife.OnClick;

public class MainActivity extends AppCompatActivity {

private Camera mCamera;

private CameraPreview mPreview;

private Camera.PictureCallback mPicture;

@BindView(R.id.layout_preview)

LinearLayout cameraPreview;

@BindView(R.id.txt_result)

TextView txtResult;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

ButterKnife.bind(this);

//keep screen always on

getWindow().addFlags(WindowManager.LayoutParams.FLAG_KEEP_SCREEN_ON);

checkPermission();

}

//camera permission is a dangerous permission, so the user should grant this permission directly in real time. Here we show a permission pop-up and listen for the user’s response.

private void checkPermission() {

//Set up the permission listener

PermissionListener permissionlistener = new PermissionListener() {

@Override

public void onPermissionGranted() {

setupPreview();

}

@Override

public void onPermissionDenied(ArrayList<String> deniedPermissions) {

Toast.makeText(MainActivity.this, "Permission Denied\n" + deniedPermissions.toString(), Toast.LENGTH_SHORT).show();

}

};

//Check camera permission

TedPermission.with(this)

.setPermissionListener(permissionlistener)

.setDeniedMessage("If you reject permission,you can not use this service\n\nPlease turn on permissions at [Setting] > [Permission]")

.setPermissions(Manifest.permission.CAMERA)

.check();

}

//Here we set up the camera preview

private void setupPreview() {

mCamera = Camera.open();

mPreview = new CameraPreview(getBaseContext(), mCamera);

try {

//Set camera autofocus

Camera.Parameters params = mCamera.getParameters();

params.setFocusMode(Camera.Parameters.FOCUS_MODE_CONTINUOUS_PICTURE);

mCamera.setParameters(params);

} catch (Exception e) {

// ignore: continuous autofocus is unavailable on this camera, keep the default focus mode

}

cameraPreview.addView(mPreview);

mCamera.setDisplayOrientation(90);

mCamera.startPreview();

mPicture = getPictureCallback();

mPreview.refreshCamera(mCamera);

}

// take photo when the users tap the button

@OnClick(R.id.btn_take_picture)

public void takePhoto() {

mCamera.takePicture(null, null, mPicture);

}

@Override

protected void onPause() {

super.onPause();

//when on Pause, release camera in order to be used from other applications

releaseCamera();

}

private void releaseCamera() {

if (mCamera != null) {

mCamera.stopPreview();

mCamera.setPreviewCallback(null);

mCamera.release();

mCamera = null;

}

}

//Here we get the photo from the camera and pass it to mlkit processor

private Camera.PictureCallback getPictureCallback() {

return new Camera.PictureCallback() {

@Override

public void onPictureTaken(byte[] data, Camera camera) {

mlinit(BitmapFactory.decodeByteArray(data, 0, data.length));

mPreview.refreshCamera(mCamera);

}

};

}

//the main method that processes the image from the camera and gives labeling result

private void mlinit(Bitmap bitmap) {

//By default, the on-device image labeler returns at most 10 labels for an image.

//That is more than we need, so we set a confidence threshold of 0.5 to get fewer

FirebaseVisionLabelDetectorOptions options =

new FirebaseVisionLabelDetectorOptions.Builder()

.setConfidenceThreshold(0.5f)

.build();

//To label objects in an image, create a FirebaseVisionImage object from a bitmap

FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(bitmap);

//Get an instance of FirebaseVisionLabelDetector (the on-device labeler)

FirebaseVisionLabelDetector detector = FirebaseVision.getInstance()

.getVisionLabelDetector(options);

detector.detectInImage(image)

.addOnSuccessListener(

new OnSuccessListener<List<FirebaseVisionLabel>>() {

@Override

public void onSuccess(List<FirebaseVisionLabel> labels) {

StringBuilder builder = new StringBuilder();

// Get information about labeled objects

for (FirebaseVisionLabel label : labels) {

builder.append(label.getLabel())

.append(" ")

.append(label.getConfidence()).append("\n");

}

txtResult.setText(builder.toString());

}

})

.addOnFailureListener(

new OnFailureListener() {

@Override

public void onFailure(@NonNull Exception e) {

txtResult.setText(e.getMessage());

}

});

}

}
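The mlinit method above does two things with the results: it limits them with a confidence threshold and turns each label into a line of text. The sketch below reproduces that logic in plain Java so it can be run without Firebase or a device. The Label class is a hypothetical stand-in for FirebaseVisionLabel, LabelSummary is an illustrative name, and treating the threshold as inclusive is an assumption rather than documented detector behavior.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Locale;

// Sketch only: LabelSummary and Label are hypothetical, not Firebase classes.
final class LabelSummary {

    // Stand-in for FirebaseVisionLabel: a label text plus a confidence between 0 and 1.
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    // Keeps labels whose confidence is at least the threshold (inclusive by assumption).
    static List<Label> filterByConfidence(List<Label> labels, float threshold) {
        List<Label> kept = new ArrayList<>();
        for (Label label : labels) {
            if (label.confidence >= threshold) {
                kept.add(label);
            }
        }
        return kept;
    }

    // Builds one "text confidence" line per label, like the loop in onSuccess,
    // but rounds the confidence to two decimals for display.
    static String format(List<Label> labels) {
        StringBuilder builder = new StringBuilder();
        for (Label label : labels) {
            builder.append(label.text)
                    .append(" ")
                    .append(String.format(Locale.US, "%.2f", label.confidence))
                    .append("\n");
        }
        return builder.toString();
    }

    public static void main(String[] args) {
        List<Label> labels = Arrays.asList(
                new Label("Cat", 0.92f),
                new Label("Sofa", 0.31f),
                new Label("Pet", 0.50f));
        System.out.print(format(filterByConfidence(labels, 0.5f)));
    }
}
```

Keeping the formatting in a small helper like this also makes it easier to change what the result TextView shows, for example by rounding confidences, without touching the detector callbacks.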

Source: How to Integrate Machine Learning into an Android App: Best Image Recognition Tools (medium.com)
